Tutorial

In this tutorial we will do multilabel classification on PASCAL VOC 2012.

Multilabel classification is a generalization of multiclass classification in which each instance (image) can belong to several classes at once. For example, an image may belong to both a “beach” category and a “vacation pictures” category. In multiclass classification, by contrast, each image belongs to exactly one class.
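To make the distinction concrete, here is a minimal sketch of the two label encodings. The class list and the `encode` helper are illustrative assumptions and are not part of the tutorial's code.

```python
# Illustrative class list (hypothetical; these are not the PASCAL classes).
CLASSES = ["beach", "vacation pictures", "city", "portrait"]


def encode(labels, classes=CLASSES):
    """Return a 0/1 vector with a 1 for every class named in `labels`."""
    return [1 if c in labels else 0 for c in classes]


# Multiclass: exactly one class per image, so the vector is one-hot.
multiclass_label = encode(["city"])

# Multilabel: any number of classes per image, so several entries may be 1.
multilabel_label = encode(["beach", "vacation pictures"])

print(multiclass_label)  # [0, 0, 1, 0]
print(multilabel_label)  # [1, 1, 0, 0]
```

A multilabel target is therefore a vector of independent yes/no decisions, one per class.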

Caffe supports multilabel classification through the SigmoidCrossEntropyLoss layer, and we will load data using a Python data layer. Data could also be provided through HDF5 or LMDB data layers, but the Python data layer gives full control over how images and labels are loaded, so that’s what we will use.
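As a rough illustration of what a sigmoid cross-entropy loss computes, the sketch below sums one binary cross-entropy term per class for a single image. The function name and the example numbers are assumptions made for illustration. This is not Caffe's implementation, and how Caffe normalizes the sum over a batch is not covered here.

```python
import math


def sigmoid_cross_entropy(scores, targets):
    """Sum of per-class binary cross-entropies for one image.

    scores: raw (pre-sigmoid) network outputs, one per class.
    targets: 0/1 ground-truth labels, one per class.
    """
    total = 0.0
    for x, t in zip(scores, targets):
        # Numerically stable form of -[t*log(s) + (1-t)*log(1-s)],
        # where s = sigmoid(x).
        total += max(x, 0.0) - x * t + math.log1p(math.exp(-abs(x)))
    return total


scores = [3.0, -2.0, 0.5]   # hypothetical raw scores for three classes
targets = [1, 0, 1]         # hypothetical ground truth
loss = sigmoid_cross_entropy(scores, targets)
print(round(loss, 4))
```

Because each class gets its own sigmoid, the classes do not compete with each other the way they would under a softmax, which is what allows several labels to be active at once.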

Preliminaries

First, make sure you compile Caffe with the Python layer enabled:

    WITH_PYTHON_LAYER := 1

Second, download PASCAL VOC 2012. It’s available here:

Third, import modules:

    import sys

- Fourth, set data directories and initialize Caffe:

    # set data root directory, e.g:

Define network prototxts

- Let’s start by defining the nets using caffe.NetSpec. Note that we use the SigmoidCrossEntropyLoss layer, which is the right loss for multilabel classification. Also note how the data layer is defined.

    # helper function for common structures

Write nets and solver files

- Now we can create the net and solver prototxts. For the solver, we use the CaffeSolver class from the “tools” module.

    workdir = './pascal_multilabel_with_datalayer'

This net uses a Python data layer, ‘PascalMultilabelDataLayerSync’, which is defined in ‘./pycaffe/layers/pascal_multilabel_datalayers.py’.

Take a look at the code. It’s quite straightforward, and it gives you full control over data and labels.
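For readers who have not written one before, the sketch below shows the general shape of a Caffe Python layer: a class with `setup`, `reshape`, `forward`, and `backward` methods that fills its `top` blobs. Everything here is a toy assumption. `ToyMultilabelDataLayer` and `FakeBlob` are hypothetical names, the data is random, and the class does not subclass `caffe.Layer`, so the sketch runs without Caffe installed. It is not the code of ‘PascalMultilabelDataLayerSync’.

```python
import numpy as np


class FakeBlob(object):
    """Hypothetical stand-in for a Caffe blob so the sketch runs without Caffe."""

    def __init__(self):
        self.data = np.zeros((0,), dtype=np.float32)

    def reshape(self, *shape):
        self.data = np.zeros(shape, dtype=np.float32)


class ToyMultilabelDataLayer(object):  # a real layer would subclass caffe.Layer
    def setup(self, bottom, top):
        # Hard-coded here; a real layer would typically read such settings
        # from the layer definition in the prototxt.
        self.batch_size, self.im_shape, self.num_classes = 4, (8, 8), 20
        self.rng = np.random.RandomState(0)
        top[0].reshape(self.batch_size, 3, self.im_shape[0], self.im_shape[1])
        top[1].reshape(self.batch_size, self.num_classes)

    def reshape(self, bottom, top):
        pass  # shapes are fixed in setup

    def forward(self, bottom, top):
        for i in range(self.batch_size):
            # Random pixels stand in for a loaded, preprocessed image.
            top[0].data[i, ...] = self.rng.rand(3, *self.im_shape)
            # Multi-hot label: two random classes marked as present.
            present = self.rng.choice(self.num_classes, size=2, replace=False)
            top[1].data[i, ...] = 0
            top[1].data[i, present] = 1

    def backward(self, top, propagate_down, bottom):
        pass  # data layers have nothing to backpropagate


top = [FakeBlob(), FakeBlob()]
layer = ToyMultilabelDataLayer()
layer.setup([], top)
layer.forward([], top)
print(top[0].data.shape, top[1].data.shape)  # (4, 3, 8, 8) (4, 20)
```

The point of the design is that `forward` is ordinary Python, so any loading, augmentation, or label logic can live there.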

Now we can load the Caffe solver as usual.

    solver = caffe.SGDSolver(osp.join(workdir, 'solver.prototxt'))

BatchLoader initialized with 5717 images

PascalMultilabelDataLayerSync initialized for split: train, with bs: 128, im_shape: [227, 227].

BatchLoader initialized with 5823 images

PascalMultilabelDataLayerSync initialized for split: val, with bs: 128, im_shape: [227, 227].

    print(solver.net.blobs['data'].data.shape)  # (128, 3, 227, 227)

(128, 3, 227, 227)

(128, 20)

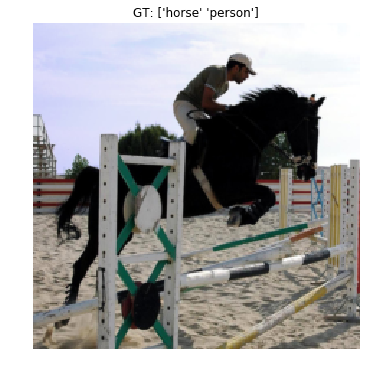

- Let’s check the data we have loaded.

    transformer = tools.SimpleTransformer()  # This is simply to add back the bias, re-shuffle the color channels to RGB, and so on...

(3, 227, 227)

(20,)

[ 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 0. 1. 0. 1. 0. 0. 0. 1. 0.]

- NOTE: we are reading the image from the data layer, so the resolution is lower than that of the original PASCAL image.
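The kind of inverse transform described above (adding back the mean and reordering the channels to RGB) can be sketched as follows. The mean value, the function names, and the absence of any scaling are assumptions for illustration. Check the actual `SimpleTransformer` code for the values it uses.

```python
import numpy as np

# Assumed per-channel mean in BGR order (an illustrative value only).
MEAN_BGR = np.array([128.0, 128.0, 128.0])


def preprocess(rgb_image):
    """H x W x 3 RGB uint8 -> 3 x H x W BGR float with the mean subtracted."""
    bgr = rgb_image[:, :, ::-1].astype(np.float32)
    return (bgr - MEAN_BGR).transpose(2, 0, 1)


def deprocess(blob_image):
    """Invert preprocess: 3 x H x W BGR float -> H x W x 3 RGB uint8."""
    bgr = blob_image.transpose(1, 2, 0) + MEAN_BGR
    return np.clip(np.round(bgr), 0, 255).astype(np.uint8)[:, :, ::-1]


# Round trip on a small random image.
rgb = np.random.RandomState(0).randint(0, 256, size=(4, 5, 3)).astype(np.uint8)
restored = deprocess(preprocess(rgb))
print(preprocess(rgb).shape, restored.shape)  # (3, 4, 5) (4, 5, 3)
```

An array in the `(3, 227, 227)` layout printed above has to go through a step like `deprocess` before it can be displayed with an ordinary image viewer.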

Train a net

- Let’s train the net. First, though, we need some way to measure the accuracy. Hamming distance is commonly used in multilabel problems. We also need a simple test loop. Let’s write that down.

    def hamming_distance(gt, est):
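As a self-contained illustration of this kind of measure, here is a minimal sketch of a Hamming-style accuracy: the fraction of label positions on which ground truth and estimate agree. Strictly speaking, the Hamming distance counts the positions that disagree, so this accuracy is one minus the normalized distance. The name `hamming_accuracy` and the example vectors are assumptions, and this is not necessarily identical to the tutorial's own function.

```python
def hamming_accuracy(gt, est):
    """Fraction of positions where two equal-length 0/1 vectors agree."""
    if len(gt) != len(est):
        raise ValueError("label vectors must have the same length")
    matches = sum(1 for g, e in zip(gt, est) if g == e)
    return matches / float(len(gt))


# Illustrative 20-class example: the estimate misses one of three labels.
gt = [0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0]
est = [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0]
print(hamming_accuracy(gt, est))  # 0.95
```

Averaging this value over all test images gives a single accuracy figure for the net.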

- Alright, now let’s train for a while.

    %%time

itt:100 accuracy:0.9591

itt:200 accuracy:0.9599

itt:300 accuracy:0.9596

itt:400 accuracy:0.9584

itt:500 accuracy:0.9598

itt:600 accuracy:0.9590

- Great, the accuracy settles at about 0.96 within the first few hundred iterations, so the net seems to converge rather quickly. It may seem strange that the accuracy is so high from the start, but this is because the ground truth is sparse. There are 20 classes in PASCAL, and usually only one or two are present in an image, so predicting all zeros already yields rather high accuracy. Let’s check to make sure.

    %%time

Baseline accuracy:0.9241

CPU times: user 40.4 s, sys: 864 ms, total: 41.3 s

Wall time: 41.3 s
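The arithmetic behind this baseline is simple: an all-zeros prediction is wrong only on the labels that are actually present. A baseline of 0.9241 over 20 classes therefore corresponds to roughly (1 - 0.9241) × 20 ≈ 1.5 positive labels per image on average. The sketch below reproduces the effect on a hypothetical toy set. The helper names and the four label vectors are made up for illustration.

```python
NUM_CLASSES = 20


def make_label(present):
    """Build a 0/1 vector of length NUM_CLASSES from a set of class indices."""
    return [1 if i in present else 0 for i in range(NUM_CLASSES)]


def all_zero_accuracy(label_vectors):
    """Per-label accuracy obtained by predicting 0 for every class."""
    total = sum(len(v) for v in label_vectors)
    zeros = sum(v.count(0) for v in label_vectors)
    return zeros / float(total)


# Hypothetical toy set: two images with one class, two images with two classes.
labels = [make_label({14}), make_label({7}),
          make_label({5, 14}), make_label({2, 14})]
print(all_zero_accuracy(labels))  # 0.925
```

This is why the trained net's accuracy should be judged against the all-zeros baseline and not against 0.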

Look at some prediction results

    test_net = solver.test_nets[0]

['aeroplane' 'bicycle' 'bird' 'boat' 'bottle' 'bus' 'car' 'cat' 'chair' 'cow' 'diningtable' 'dog' 'horse' 'motorbike' 'person' 'pottedplant' 'sheep' 'sofa' 'train' 'tvmonitor']

gt [0 0 0 0 0 1 1 0 0 0 0 0 0 0 1 0 0 0 0 0]

est [0 0 0 0 0 1 0 0 0 0 0 0 0 0 1 0 0 0 0 0]

gt [0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0]

est [0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0 0 0 0 0]

gt [0 0 1 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0]

est [0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]

gt [0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0]

est [0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0]

gt [0 0 0 0 0 0 0 0 1 0 0 1 0 0 1 0 0 0 0 0]

est [0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 0]
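The 0/1 vectors are easier to read once they are mapped back to class names. The sketch below does this for the first gt/est pair printed above. The `decode` helper is a hypothetical name introduced for illustration.

```python
CLASSES = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'bus', 'car',
           'cat', 'chair', 'cow', 'diningtable', 'dog', 'horse', 'motorbike',
           'person', 'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor']


def decode(vector, classes=CLASSES):
    """Return the names of the classes whose entry in `vector` is nonzero."""
    return [name for name, flag in zip(classes, vector) if flag]


gt = [0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0]
est = [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0]

print(decode(gt))   # ['bus', 'car', 'person']
print(decode(est))  # ['bus', 'person']

# Classes present in the ground truth that the net failed to predict.
missed = sorted(set(decode(gt)) - set(decode(est)))
print(missed)       # ['car']
```

Read this way, the first example shows the net finding the bus and the person but missing the car.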

History

- 20180816: created.