R-CNN

introduction

R-CNN is a state-of-the-art detector that classifies region proposals with a fine-tuned Caffe model. For full details of the R-CNN system and model, refer to its project site and the paper:

Rich feature hierarchies for accurate object detection and semantic segmentation. Ross Girshick, Jeff Donahue, Trevor Darrell, Jitendra Malik. CVPR 2014. Arxiv 2013.

In this example, we run detection with a pure Caffe version of the R-CNN model for ImageNet. The R-CNN detector outputs class scores for the 200 detection classes of ILSVRC13. Keep in mind that these are raw one-vs-all SVM scores, so they are not probabilistically calibrated or exactly comparable across classes. Note that this off-the-shelf model is provided simply for convenience and is not the full R-CNN model.

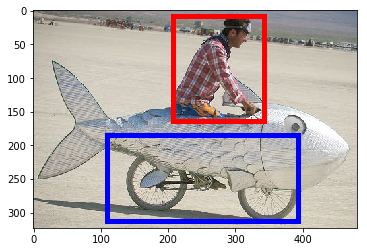

Let’s run detection on an image of a bicyclist riding a fish bike in the desert (from the ImageNet challenge—no joke).

selective search

First, we’ll need region proposals and the Caffe R-CNN ImageNet model:

Selective Search is the region proposer used by R-CNN. The selective_search_ijcv_with_python Python module takes care of extracting proposals through the selective search MATLAB implementation.

clone repo

`cd $CAFFE_ROOT/caffe/python`

install matlab

Install MATLAB and run the demo.m file to compile the functions.

see here

Note: if the following error appears, restart the computer to resolve it:

OSError: [Errno 2] No such file or directory

compile matlab functions

`cd caffe/python/caffe/selective_search_ijcv_with_python`

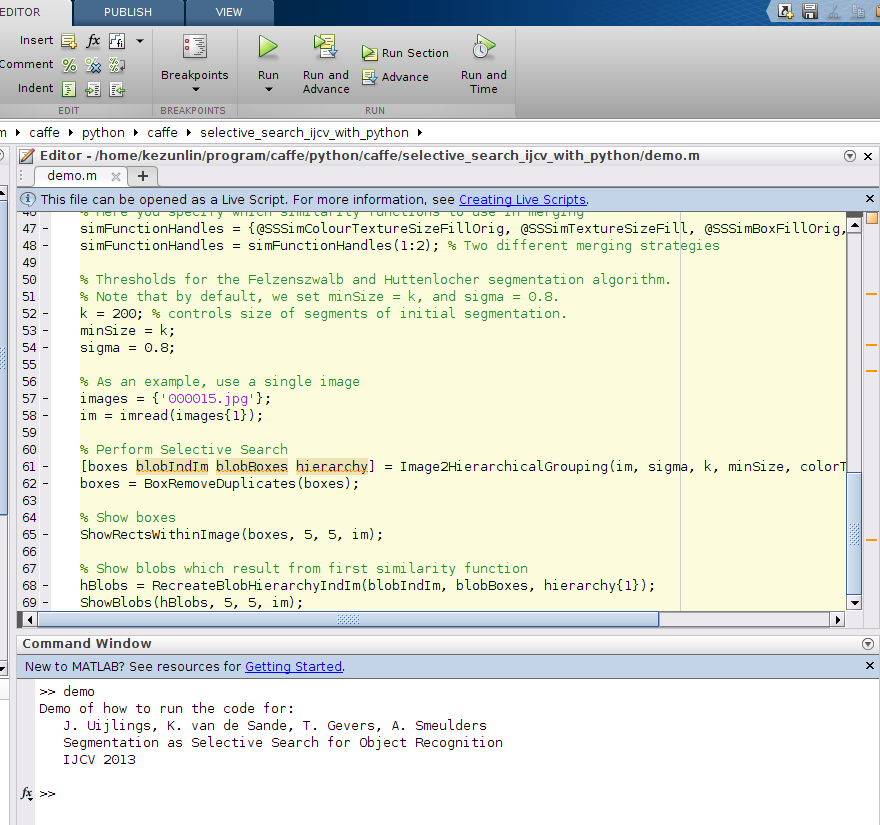

run demo in matlab

original image

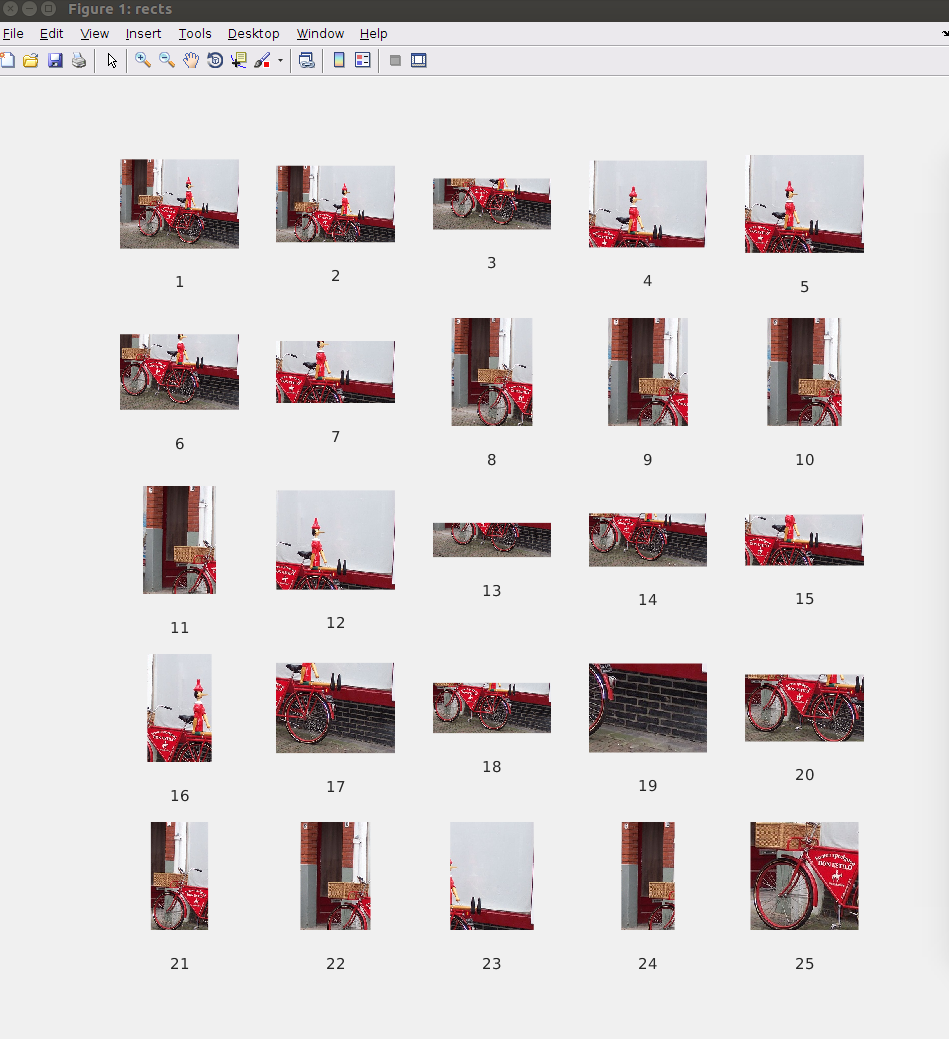

region results

detect regions

Run the download script to fetch the Caffe R-CNN ImageNet model.

`./scripts/download_model_binary.py models/bvlc_reference_rcnn_ilsvrc13`

With that done, we’ll call the bundled detect.py to generate the region proposals and run the network. For an explanation of the arguments, run ./detect.py --help.

`cd caffe/examples/`

...

I1129 15:02:22.498908 3483 net.cpp:242] This network produces output fc-rcnn

I1129 15:02:22.498919 3483 net.cpp:255] Network initialization done.

I1129 15:02:22.577332 3483 upgrade_proto.cpp:53] Attempting to upgrade input file specified using deprecated V1LayerParameter: ../models/bvlc_reference_rcnn_ilsvrc13/bvlc_reference_rcnn_ilsvrc13.caffemodel

I1129 15:02:22.685262 3483 upgrade_proto.cpp:61] Successfully upgraded file specified using deprecated V1LayerParameter

I1129 15:02:22.685796 3483 upgrade_proto.cpp:67] Attempting to upgrade input file specified using deprecated input fields: ../models/bvlc_reference_rcnn_ilsvrc13/bvlc_reference_rcnn_ilsvrc13.caffemodel

I1129 15:02:22.685804 3483 upgrade_proto.cpp:70] Successfully upgraded file specified using deprecated input fields.

W1129 15:02:22.685809 3483 upgrade_proto.cpp:72] Note that future Caffe releases will only support input layers and not input fields.

Loading input...

selective_search_rcnn({'/home/kezunlin/program/caffe/examples/images/fish-bike.jpg'}, '/tmp/tmpkOe6J0.mat')

/home/kezunlin/program/caffe/python/caffe/detector.py:140: VisibleDeprecationWarning: using a non-integer number instead of an integer will result in an error in the future

crop = im[window[0]:window[2], window[1]:window[3]]

/home/kezunlin/program/caffe/python/caffe/detector.py:174: VisibleDeprecationWarning: using a non-integer number instead of an integer will result in an error in the future

context_crop = im[box[0]:box[2], box[1]:box[3]]

/usr/local/lib/python2.7/dist-packages/skimage/transform/_warps.py:84: UserWarning: The default mode, 'constant', will be changed to 'reflect' in skimage 0.15.

warn("The default mode, 'constant', will be changed to 'reflect' in "

/home/kezunlin/program/caffe/python/caffe/detector.py:177: VisibleDeprecationWarning: using a non-integer number instead of an integer will result in an error in the future

crop[pad_y:(pad_y + crop_h), pad_x:(pad_x + crop_w)] = context_crop

Processed 1565 windows in 15.899 s.

/usr/local/lib/python2.7/dist-packages/pandas/core/generic.py:1299: PerformanceWarning:

your performance may suffer as PyTables will pickle object types that it cannot

map directly to c-types [inferred_type->mixed,key->block1_values] [items->['prediction']]

return pytables.to_hdf(path_or_buf, key, self, **kwargs)

Saved to _temp/det_output.h5 in 0.082 s.

This run was in GPU mode. For CPU mode detection, call detect.py without the --gpu argument.

process regions

Running this outputs a DataFrame with the filenames, selected windows, and their detection scores to an HDF5 file.

(We only ran on one image, so the filenames will all be the same.)

`import numpy as np`

(1565, 5)

row (5,)

prediction (200,)

<class 'pandas.core.series.Series'>

prediction [-2.60202, -2.87814, -3.0061, -2.77251, -2.077...

ymin 152.958

xmin 159.692

ymax 261.702

xmax 340.586

Name: /home/kezunlin/program/caffe/examples/images/fish-bike.jpg, dtype: object

1565 regions were proposed with the R-CNN configuration of selective search. The number of proposals varies from image to image based on its contents and size, since selective search isn’t scale invariant.

In general, detect.py is most efficient when running on a lot of images: it first extracts window proposals for all of them, batches the windows for efficient GPU processing, and then outputs the results.

Simply list an image per line in the images_file, and it will process all of them.
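As a minimal sketch of preparing such a list, the snippet below writes one absolute image path per line to a text file. The helper name `write_images_file`, the file name `det_input.txt`, and the second image name are assumptions made for illustration; only `fish-bike.jpg` comes from this post.

```python
import os
import tempfile


def write_images_file(image_paths, list_path):
    """Write one absolute image path per line; return the number of lines written."""
    with open(list_path, "w") as f:
        for path in image_paths:
            f.write(os.path.abspath(path) + "\n")
    return len(image_paths)


# Hypothetical image paths, for illustration only.
images = ["images/fish-bike.jpg", "images/cat.jpg"]

# A throwaway directory stands in for the working directory used in the post.
list_path = os.path.join(tempfile.mkdtemp(), "det_input.txt")
n_written = write_images_file(images, list_path)
```

The resulting file can then be passed to detect.py as the input list, so that proposals for all listed images are extracted and batched in one run.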

Although this guide gives an example of R-CNN ImageNet detection, detect.py is clever enough to adapt to different Caffe models’ input dimensions, batch size, and output categories. You can switch the model definition and pretrained model as desired. Refer to python detect.py --help for the parameters to describe your data set. There’s no need for hardcoding.

Anyway, let’s now load the ILSVRC13 detection class names and make a DataFrame of the predictions. Note you’ll need the auxiliary ilsvrc2012 data fetched by data/ilsvrc12/get_ilsvrc12_aux.sh.

`import numpy as np`

(200, 2)

name synset_id

0 accordion n02672831

1 airplane n02691156

2 ant n02219486

3 antelope n02419796

4 apple n07739125

`#print type(df.prediction) # <class 'pandas.core.series.Series'>`

(1565,)

(200,)

(1565, 200)

name accordion airplane ant antelope apple armadillo artichoke

0 -2.602018 -2.878137 -3.006104 -2.772514 -2.077227 -2.590448 -2.414262

1 -2.997767 -3.312270 -2.878942 -3.434367 -2.227469 -2.492260 -2.383878

2 -2.476110 -3.145484 -2.377191 -2.684406 -2.289587 -2.428077 -2.390187

3 -2.362699 -2.784188 -1.981096 -2.664146 -2.207042 -2.299127 -2.181105

4 -2.929469 -2.323617 -2.755007 -3.165601 -2.188648 -2.486410 -2.505435
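The shapes printed above, (1565,), (200,) and (1565, 200), suggest that the per-window score arrays are stacked into one table with a column per class. Here is a small sketch of that step on synthetic data. The four windows, the random scores, and the use of only three class names are assumptions for illustration, not the post's actual data.

```python
import numpy as np
import pandas as pd

rng = np.random.RandomState(0)
n_windows = 4
class_names = ["accordion", "airplane", "ant"]  # first three names from the table above

# Mimic the detector output: one entry per window, each holding an array
# with one raw score per class.
prediction = pd.Series(
    [rng.randn(len(class_names)).astype(np.float32) for _ in range(n_windows)]
)
df = pd.DataFrame({"prediction": prediction})

# Stack the per-window arrays into an (n_windows, n_classes) matrix and
# label the columns with the class names.
stacked = np.vstack(df.prediction.tolist())
predictions_df = pd.DataFrame(stacked, columns=class_names)
```

Each row of `predictions_df` is one window and each column one class, which makes per-class operations such as taking a maximum or an argmax straightforward.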

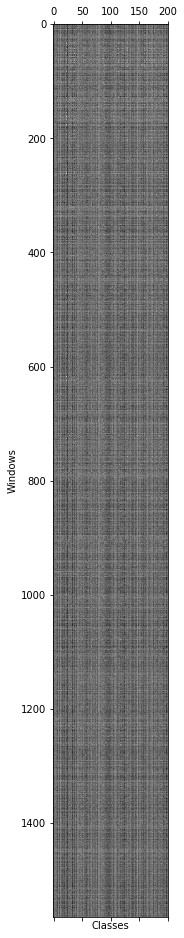

Let’s look at the activations.

`plt.gray()`

Now let’s take max across all windows and plot the top classes.

`max_s = predictions_df.max(0)`

name

person 1.839882

bicycle 0.855625

unicycle 0.085192

motorcycle 0.003604

turtle -0.030388

banjo -0.114999

electric fan -0.220595

cart -0.225192

lizard -0.365949

helmet -0.477555

dtype: float32

The top detections are in fact a person and bicycle.
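The max-across-windows step can be sketched with plain NumPy. The score matrix below is synthetic and uses only three classes (an assumption for illustration). The column-wise maximum mirrors `predictions_df.max(0)`.

```python
import numpy as np

class_names = ["person", "bicycle", "unicycle"]

# Synthetic scores: rows are windows, columns are classes.
scores = np.array([
    [1.8, -0.9, -1.2],
    [-0.5, 0.9, -0.3],
    [-1.1, 0.2, 0.1],
])

# Best score each class reaches in any window.
max_per_class = scores.max(axis=0)

# Class indices ordered from highest to lowest best score.
order = np.argsort(max_per_class)[::-1]
top_classes = [(class_names[j], float(max_per_class[j])) for j in order]

# Index of the window that achieves each class's best score.
best_window = scores.argmax(axis=0)
```

Because the scores are raw one-vs-all SVM outputs, this ranking is only a rough guide and the values are not directly comparable across classes.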

Picking good localizations is a work in progress; we pick the top-scoring person and bicycle detections.

`i = predictions_df['person'].argmax() # 70 rect`

Top detection:

name

person 1.839882

swimming trunks -1.157806

turtle -1.168884

tie -1.217267

rubber eraser -1.246662

dtype: float32

Second-best detection:

name

bicycle 0.855625

unicycle -0.334367

scorpion -0.824552

lobster -0.965544

lamp -1.076224

dtype: float32

`# Find, print, and display the top detections: person and bicycle.`

Top detection:

name

person 1.839882

swimming trunks -1.157806

turtle -1.168884

tie -1.217267

rubber eraser -1.246662

dtype: float32

Second-best detection:

name

bicycle 0.855625

unicycle -0.334367

scorpion -0.824552

lobster -0.965544

lamp -1.076224

dtype: float32

((207.792, 7.6959999999999997), 134.71799999999999, 155.88200000000001)

((108.706, 184.70400000000001), 284.78999999999996, 127.98399999999998)
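The two tuples above appear to follow the ((xmin, ymin), width, height) layout that a matplotlib `Rectangle` takes. A minimal sketch of that conversion from window corners follows. The helper name `box_to_rect` is hypothetical, and the corner values are those of the top bicycle window.

```python
def box_to_rect(ymin, xmin, ymax, xmax):
    """Convert window corners to ((x, y), width, height)."""
    return (xmin, ymin), xmax - xmin, ymax - ymin


# Corners of the top-scoring bicycle window.
rect = box_to_rect(ymin=184.704, xmin=108.706, ymax=312.688, xmax=393.496)
```

Up to floating-point rounding, this reproduces the second tuple printed above.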

That’s cool. Let’s take all ‘bicycle’ detections and apply non-maximum suppression (NMS) to get rid of overlapping windows.

`def nms_detections(dets, overlap=0.3):`

`scores = predictions_df['bicycle'] # (1565,)`

(1565, 5)

(181, 5)
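The body of `nms_detections` is not shown in this post, so the following is only a sketch of greedy NMS and not necessarily the function used here. It assumes rows of [xmin, ymin, xmax, ymax, score] and uses intersection over union (IoU) as the overlap measure. The name `greedy_nms` and the example boxes are made up for illustration.

```python
import numpy as np


def greedy_nms(dets, overlap=0.3):
    """Greedy NMS over rows of [xmin, ymin, xmax, ymax, score].

    Keeps the highest-scoring box, drops every remaining box whose IoU
    with it exceeds `overlap`, and repeats. Returns the kept rows,
    highest score first.
    """
    dets = np.asarray(dets, dtype=float)
    if dets.size == 0:
        return dets.reshape(0, 5)
    x1, y1, x2, y2 = dets[:, 0], dets[:, 1], dets[:, 2], dets[:, 3]
    area = (x2 - x1) * (y2 - y1)
    order = np.argsort(dets[:, 4])[::-1]  # indices by descending score
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)
        rest = order[1:]
        # Intersection of box i with every remaining box.
        w = np.maximum(0.0, np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]))
        h = np.maximum(0.0, np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]))
        inter = w * h
        iou = inter / (area[i] + area[rest] - inter)
        # Only boxes that do not overlap box i too much stay in the running.
        order = rest[iou <= overlap]
    return dets[keep]


# Synthetic boxes, for illustration only.
boxes = np.array([
    [0, 0, 10, 10, 0.9],
    [1, 1, 11, 11, 0.8],    # overlaps the first box heavily
    [20, 20, 30, 30, 0.7],  # disjoint from both
])
kept = greedy_nms(boxes)
```

In this example the second box is suppressed by the first, while the disjoint third box survives. A lower `overlap` threshold suppresses more aggressively.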

`print(nms_dets[:3])`

[[ 108.706 184.704 393.496 312.688 0.85562503]

[ 0. 14.43 397.344 323.27 -0.73134482]

[ 131.794 202.982 249.196 290.562 -1.26836455]]

Show top 3 NMS’d detections for ‘bicycle’ in the image and note the gap between the top scoring box (red) and the remaining boxes.

`plt.imshow(im)`

scores: [ 0.85562503 -0.73134482 -1.26836455]

This was an easy instance for bicycle, as the image was in that class’s training set. The person result, however, is a true detection, since the image was not in the training set for the person class.

You should try out detection on an image of your own next!

(Remove the temp directory to clean up, and we’re done.)

`!rm -rf _temp`


History

- 20180816: created.