Series

- Getting Started with Nvidia Jetson Nano

- how to install vscode on Nvidia Jetson Nano

- how to use vscode remote-ssh for Linux arm64 aarch64 platform such as Nvidia Jetson TX1 TX2 Nano

Guide

Jetson Family

- Jetson TX1 Developer Kit

- Jetson TX2 Developer Kit

- Jetson AGX Xavier Developer Kit

- Jetson Nano Developer Kit

SDKs and Tools

- NVIDIA JetPack

- NVIDIA DeepStream SDK

- NVIDIA DIGITS for training

JetPack includes:

• Full desktop Linux with NVIDIA drivers

• AI and Computer Vision libraries and APIs

• Developer tools

• Documentation and sample code

Recommended System Requirements

Training GPU:

- Maxwell, Pascal, Volta, or Turing-based GPU (ideally with at least 6 GB of video memory); optionally, an AWS P2/P3 instance or a Microsoft Azure N-series instance

- Ubuntu 16.04/18.04 x86_64

Deployment:

- Jetson Nano Developer Kit with JetPack 4.2 or newer (Ubuntu 18.04 aarch64).

- Jetson Xavier Developer Kit with JetPack 4.0 or newer (Ubuntu 18.04 aarch64)

- Jetson TX2 Developer Kit with JetPack 3.0 or newer (Ubuntu 16.04 aarch64).

- Jetson TX1 Developer Kit with JetPack 2.3 or newer (Ubuntu 16.04 aarch64).
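The deployment minimums above can be checked mechanically. The following is a minimal sketch, not an official tool: the board keys and the helper names `parse_version` and `meets_minimum` are made up for illustration, and the table only contains the four minimums listed above.

```python
# Minimum JetPack release per developer kit, copied from the list above.
# The dictionary keys are hypothetical short names chosen for this sketch.
MIN_JETPACK = {
    "nano": (4, 2),
    "xavier": (4, 0),
    "tx2": (3, 0),
    "tx1": (2, 3),
}


def parse_version(text):
    """Turn a string like '4.2.2' into (4, 2, 2) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def meets_minimum(board, jetpack_version):
    """Return True if jetpack_version is at least the listed minimum for board."""
    minimum = MIN_JETPACK[board.lower()]
    return parse_version(jetpack_version) >= minimum


if __name__ == "__main__":
    print(meets_minimum("nano", "4.2.2"))
    print(meets_minimum("tx2", "2.3"))
```

Comparing tuples of integers avoids the usual trap of comparing version strings character by character.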

Jetson Nano Developer Kit

Jetson Nano Device

Jetson Nano was introduced in April 2019 for only $99.

jetson nano

1. microSD card slot for main storage

2. 40-pin expansion header

3. Micro-USB port for 5V power input or for data

4. Gigabit Ethernet port

5. USB 3.0 ports (x4)

6. HDMI output port

7. DisplayPort connector

8. DC Barrel jack for 5V power input

9. MIPI CSI camera connector

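Power input: items 3 and 8 in the list above (Micro-USB port and DC barrel jack)

Camera: item 9 (MIPI CSI camera connector)

The green LED (D53) next to the Micro-USB port should light up when the board is powered.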

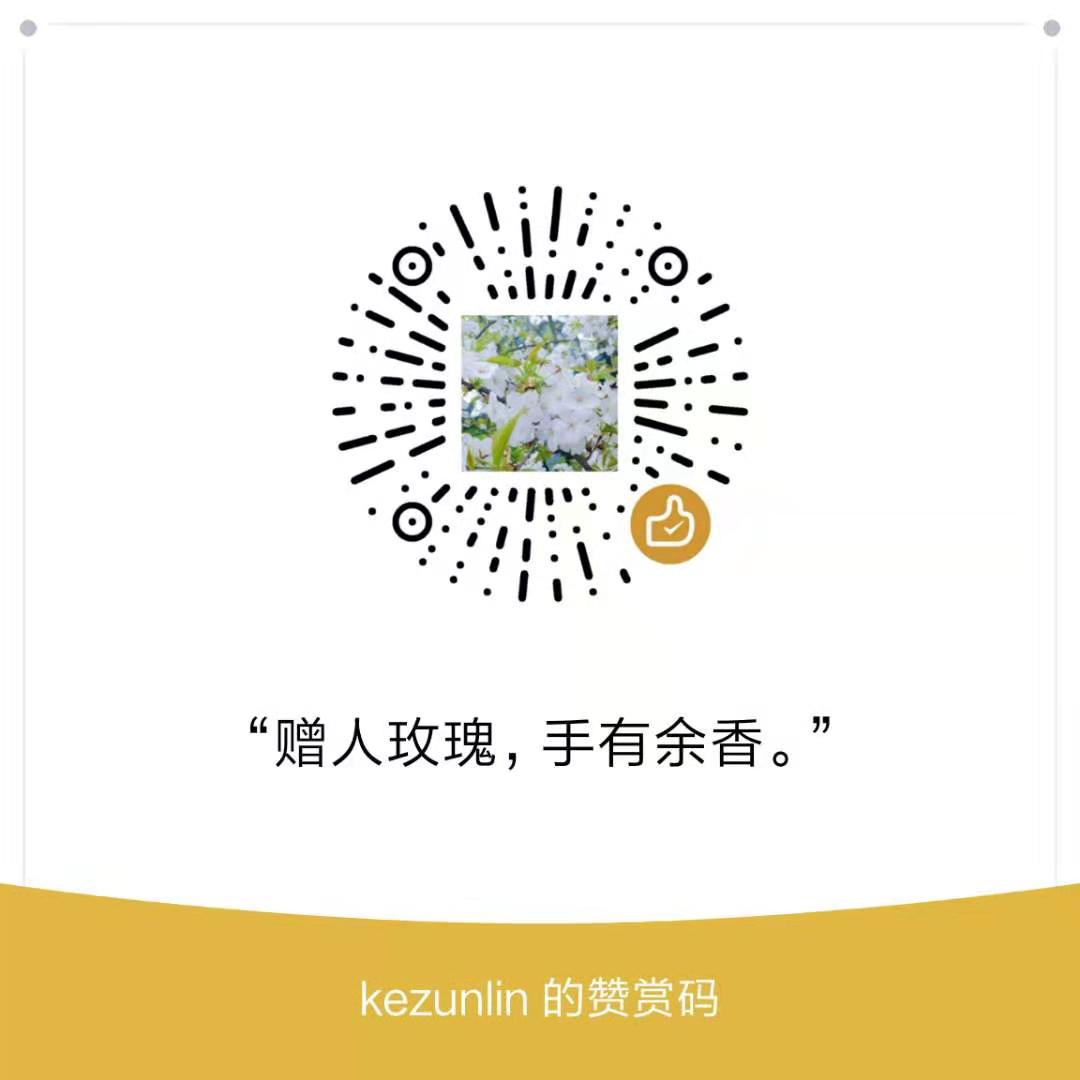

inference performance

multiple cameras with jetson nano

Write Image to the microSD Card

- Download the jetson-nano-sd-card-image-r3223.zip

- Format the microSD card as exFAT if it is 64 GB or larger, and as FAT otherwise.

- Use Etcher or a Linux command to write the image to the microSD card.

The image is about 5 GB, so the download takes a while.

Etcher is the recommended way to write the image to the microSD card.

Linux commands to write the image to the microSD card

$ df -h

Filesystem Size Used Avail Use% Mounted on

udev 7.8G 0 7.8G 0% /dev

tmpfs 1.6G 18M 1.6G 2% /run

/dev/sdb6 184G 162G 13G 93% /

tmpfs 7.8G 71M 7.8G 1% /dev/shm

tmpfs 5.0M 4.0K 5.0M 1% /run/lock

tmpfs 7.8G 0 7.8G 0% /sys/fs/cgroup

/dev/sdb5 453M 157M 270M 37% /boot

tmpfs 1.6G 56K 1.6G 1% /run/user/1000

/dev/sdb4 388G 337G 52G 87% /media/kezunlin/Workspace

/dev/sdc1 30G 32K 30G 1% /media/kezunlin/nano

$ dmesg | tail | awk '$3 == "sd" {print}'

#In this example, we can see the 32GB microSD card was assigned /dev/sdc:

[ 613.537818] sd 4:0:0:0: Attached scsi generic sg2 type 0

[ 613.940079] sd 4:0:0:0: [sdc] 62333952 512-byte logical blocks: (31.9 GB/29.7 GiB)

[ 613.940664] sd 4:0:0:0: [sdc] Write Protect is off

[ 613.940672] sd 4:0:0:0: [sdc] Mode Sense: 87 00 00 00

[ 613.942730] sd 4:0:0:0: [sdc] Write cache: disabled, read cache: enabled, doesnt support DPO or FUA

[ 613.956666] sd 4:0:0:0: [sdc] Attached SCSI removable disk

# Use this command to write the zipped SD card image to the microSD card:

$ /usr/bin/unzip -p ~/Downloads/jetson-nano-sd-card-image-r3223.zip | sudo /bin/dd of=/dev/sdc bs=1M status=progress

0+167548 records in

0+167548 records out

12884901888 bytes (13 GB, 12 GiB) copied, 511.602 s, 25.2 MB/s

# The written image contains 12 partitions, as fdisk shows:

$ sudo fdisk -l

GPT PMBR size mismatch (25165823 != 62333951) will be corrected by w(rite).

Disk /dev/sde: 29.7 GiB, 31914983424 bytes, 62333952 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: E696E264-F2EA-434A-900C-D9ACA2F99E43

Device Start End Sectors Size Type

/dev/sde1 24576 25165790 25141215 12G Linux filesystem

/dev/sde2 2048 2303 256 128K Linux filesystem

/dev/sde3 4096 4991 896 448K Linux filesystem

/dev/sde4 6144 7295 1152 576K Linux filesystem

/dev/sde5 8192 8319 128 64K Linux filesystem

/dev/sde6 10240 10623 384 192K Linux filesystem

/dev/sde7 12288 13439 1152 576K Linux filesystem

/dev/sde8 14336 14463 128 64K Linux filesystem

/dev/sde9 16384 17663 1280 640K Linux filesystem

/dev/sde10 18432 19327 896 448K Linux filesystem

/dev/sde11 20480 20735 256 128K Linux filesystem

/dev/sde12 22528 22687 160 80K Linux filesystem

Partition table entries are not in disk order.

# When the dd command finishes, eject the disk device from the command line:

$ sudo eject /dev/sdc

# Physically remove microSD card from the computer.
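Because `dd` overwrites whatever device it is pointed at, it is worth confirming the device name and capacity before writing. The sketch below parses the kernel lines shown in the `dmesg` output above and checks that the image fits. The function names `find_disks` and `image_fits` are made up for this example, and the regular expression only assumes the line format seen in this log.

```python
import re

# Matches kernel lines such as:
# [ 613.940079] sd 4:0:0:0: [sdc] 62333952 512-byte logical blocks: (31.9 GB/29.7 GiB)
BLOCKS_RE = re.compile(r"\[(sd[a-z]+)\] (\d+) (\d+)-byte logical blocks")


def find_disks(dmesg_text):
    """Return {device_path: capacity_in_bytes} for each disk announced in dmesg_text."""
    disks = {}
    for match in BLOCKS_RE.finditer(dmesg_text):
        name, blocks, block_size = match.groups()
        disks["/dev/" + name] = int(blocks) * int(block_size)
    return disks


def image_fits(image_bytes, disk_bytes):
    """Return True if an image of image_bytes can be written to a disk of disk_bytes."""
    return image_bytes <= disk_bytes


if __name__ == "__main__":
    sample = ("[ 613.940079] sd 4:0:0:0: [sdc] 62333952 512-byte "
              "logical blocks: (31.9 GB/29.7 GiB)")
    disks = find_disks(sample)
    print(disks)
    # 12884901888 is the byte count reported by dd above.
    print(image_fits(12884901888, disks["/dev/sdc"]))
```

The same arithmetic accounts for the numbers in the fdisk warning: the image is 12884901888 / 512 = 25165824 sectors long, while the card has 62333952 sectors, so the GPT written with the image describes a smaller disk than the card.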

Steps:

- Insert the microSD card into the appropriate slot

- Connect the display, USB keyboard/mouse, and Ethernet cable.

- Depending on the power supply you want to use, you may have to add or remove the jumper for power selection:

  - If using the barrel jack (part 8), the jumper must be set.

  - If using USB (part 3), the jumper must be off.

- Plug in the power supply. The green LED (D53) close to the Micro-USB port should turn green, and the display should show the NVIDIA logo before booting begins.

Prepare Nano System

- Jetson Nano L4T 32.2.1-20190812212815 (JetPack 4.2.2)

  - nv-jetson-nano-sd-card-image-r32.2.1.zip

  - DeepStream SDK 4.0.1 (gstreamer1.0)

- Jetson Nano L4T 32.3-20191217 (JetPack 4.3)

  - nv-jetson-nano-sd-card-image-r32.3.1.zip

  - DeepStream SDK 4.0.2 (gstreamer1.0)

- Ubuntu 18.04 aarch64 (bionic)

- CUDA 10.0 SM_72 (installed)

- TensorRT-5.1.6.0 (installed)

- OpenCV 3.3.1 (installed)

- Python 2.7 (installed)

- Python 3.6.9

- Numpy 1.13.3

- QT 5.9.5

CUDA 10.0 and TensorRT 5.1.6.0 are already installed on the Jetson Nano.

aarch64 refers to 64-bit ARM machines such as the Jetson Nano and Raspberry Pi.

DeepStream SDK 4.0.1 requires JetPack 4.2.2. DeepStream SDK 4.0.2 requires JetPack 4.3.
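To see which DeepStream SDK matches an installed system, the L4T version reported by `dpkg-query --show nvidia-l4t-core` can be mapped to the pairs listed above. This is only a sketch: the `RELEASES` table covers just the two releases mentioned in this post, and `lookup_release` is a made-up helper name.

```python
# L4T major.minor -> (JetPack, DeepStream SDK).
# Only the two releases listed in this post; other releases are not covered.
RELEASES = {
    "32.2": ("4.2.2", "4.0.1"),
    "32.3": ("4.3", "4.0.2"),
}


def lookup_release(dpkg_line):
    """Map one line of `dpkg-query --show nvidia-l4t-core` output to (JetPack, DeepStream).

    Example input: "nvidia-l4t-core 32.2.1-20190812212815"
    Returns None when the L4T release is not in the table.
    """
    version = dpkg_line.split()[1]           # "32.2.1-20190812212815"
    numeric = version.split("-")[0]          # "32.2.1"
    major_minor = ".".join(numeric.split(".")[:2])  # "32.2"
    return RELEASES.get(major_minor)


if __name__ == "__main__":
    print(lookup_release("nvidia-l4t-core 32.2.1-20190812212815"))
```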

SSH for nano with ForwardX11

On the Nano, edit /etc/ssh/sshd_config (X11Forwarding is a server-side option)

X11Forwarding yes

and restart ssh

$ sudo /etc/init.d/ssh restart

[sudo] password for nano:

[ ok ] Restarting ssh (via systemctl): ssh.service.

On my Ubuntu client, edit ~/.ssh/config

Host nano

HostName 192.168.0.63

User nano

ForwardX11 yes

# method 1: use the `nano` host alias from `~/.ssh/config`, which sets `ForwardX11 yes`

$ ssh nano

# method 2: with `-X`

$ ssh -X nano@192.168.0.63

# `-X` means enabling ForwardX11
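Once logged in, a quick way to tell whether forwarding is working is to look at the `DISPLAY` variable in the remote session. The sketch below assumes the default sshd behaviour, where forwarded sessions get a value such as `localhost:10.0`; if the server is configured differently the check would need adjusting. `x11_forwarding_active` is a made-up helper name.

```python
import os


def x11_forwarding_active(env=None):
    """Return True if DISPLAY looks like an SSH-forwarded X11 display.

    Assumes the sshd default, under which forwarded sessions see
    DISPLAY=localhost:<n>.0. A local desktop session typically has ":0" instead.
    """
    env = os.environ if env is None else env
    display = env.get("DISPLAY", "")
    return display.startswith("localhost:")


if __name__ == "__main__":
    # Run this on the Nano inside the SSH session.
    print(x11_forwarding_active())
```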

add CUDA envs

edit ~/.bashrc

# Add this to your .bashrc file

export CUDA_HOME=/usr/local/cuda

# Adds the CUDA compiler to the PATH

export PATH=$CUDA_HOME/bin:$PATH

# Adds the libraries

export LD_LIBRARY_PATH=$CUDA_HOME/lib64:$LD_LIBRARY_PATH

check cuda version

$ source ~/.bashrc

$ nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2019 NVIDIA Corporation

Built on Mon_Mar_11_22:13:24_CDT_2019

Cuda compilation tools, release 10.0, V10.0.326
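If a script needs the CUDA version, the same `nvcc --version` output can be parsed instead of read by eye. A minimal sketch, assuming the output format shown above; `parse_nvcc_version` and `installed_cuda_version` are made-up names, and the code sticks to features available in the Python 3.6 that ships on the Nano.

```python
import re
import subprocess

# Matches the last line of `nvcc --version`, e.g.
# "Cuda compilation tools, release 10.0, V10.0.326"
RELEASE_RE = re.compile(r"release (\d+\.\d+), V([\d.]+)")


def parse_nvcc_version(output):
    """Return (release, full_version) from nvcc output, or None if not found."""
    match = RELEASE_RE.search(output)
    return match.groups() if match else None


def installed_cuda_version():
    """Run nvcc and parse its version; return None if nvcc is not on the PATH."""
    try:
        result = subprocess.run(
            ["nvcc", "--version"],
            stdout=subprocess.PIPE,
            stderr=subprocess.STDOUT,
            universal_newlines=True,
        )
    except FileNotFoundError:
        return None
    return parse_nvcc_version(result.stdout)


if __name__ == "__main__":
    print(installed_cuda_version())
```

A `None` result usually means the `PATH` export from `~/.bashrc` has not been picked up in the current shell.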

check versions

$ uname -a

Linux nano-desktop 4.9.140-tegra #1 SMP PREEMPT Sat Oct 19 15:54:06 PDT 2019 aarch64 aarch64 aarch64 GNU/Linux

$ dpkg-query --show nvidia-l4t-core

nvidia-l4t-core 32.2.1-20190812212815

$ python --version

Python 2.7.15+

$ git --version

git version 2.17.1

# check tensorrt version

$ ll -al /usr/lib/aarch64-linux-gnu/libnvinfer_plugin.so.5

lrwxrwxrwx 1 root root 26 Jun 5 2019 /usr/lib/aarch64-linux-gnu/libnvinfer_plugin.so.5 -> libnvinfer_plugin.so.5.1.6
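The symlink trick above also works from Python: the link target carries the full version in its file name. This sketch assumes the library path shown in the listing; `soname_version` and `tensorrt_version` are made-up helper names.

```python
import os
import re


def soname_version(target):
    """Extract '5.1.6' from a file name like 'libnvinfer_plugin.so.5.1.6'."""
    match = re.search(r"\.so\.(\d+(?:\.\d+)*)$", target)
    return match.group(1) if match else None


def tensorrt_version(link="/usr/lib/aarch64-linux-gnu/libnvinfer_plugin.so.5"):
    """Read the symlink target and return its version suffix, or None.

    The default path is the one shown in the listing above; it is an
    assumption that other installations use the same location.
    """
    if not os.path.islink(link):
        return None
    return soname_version(os.readlink(link))


if __name__ == "__main__":
    print(tensorrt_version())
```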

There is no need to download the TensorRT 5.1.5.0 GA tar package for Ubuntu 18.04 and CUDA 10.0 and place it at /opt/TensorRT-5.1.5.0, because TensorRT is already installed on the Nano.

install packages

- cmake 3.10.2

- cmake-gui 3.10.2

- python 3.6.9

- QT 5.9.5

Use the official Ubuntu sources

Do not replace /etc/apt/sources.list with the Aliyun mirror; otherwise many packages will fail to install.

# update

$ sudo apt-get update

# install cmake

$ sudo apt-get install cmake

Get:1 http://ports.ubuntu.com/ubuntu-ports bionic-updates/main arm64 cmake arm64 3.10.2-1ubuntu2.18.04.1 [2,971 kB]

# install cmake-gui

$ sudo apt-get install cmake-gui cmake-qt-gui

Get:1 http://ports.ubuntu.com/ubuntu-ports bionic-updates/universe arm64 cmake-qt-gui arm64 3.10.2-1ubuntu2.18.04.1 [1,527 kB]

# install python 3.6.9

$ sudo apt -y install libpython3-dev python3-numpy

Get:1 http://ports.ubuntu.com/ubuntu-ports bionic-updates/main arm64 libpython3.6 arm64 3.6.9-1~18.04 [1,307 kB]

Get:2 http://ports.ubuntu.com/ubuntu-ports bionic-updates/main arm64 python3.6 arm64 3.6.9-1~18.04 [203 kB]

Get:3 http://ports.ubuntu.com/ubuntu-ports bionic-updates/main arm64 libpython3.6-stdlib arm64 3.6.9-1~18.04 [1,609 kB]

Get:4 http://ports.ubuntu.com/ubuntu-ports bionic-updates/main arm64 python3.6-minimal arm64 3.6.9-1~18.04 [1,327 kB]

Get:5 http://ports.ubuntu.com/ubuntu-ports bionic-updates/main arm64 libpython3.6-minimal arm64 3.6.9-1~18.04 [528 kB]

Get:6 http://ports.ubuntu.com/ubuntu-ports bionic-updates/main arm64 libpython3.6-dev arm64 3.6.9-1~18.04 [45.0 MB]

Get:7 http://ports.ubuntu.com/ubuntu-ports bionic-updates/main arm64 libpython3-dev arm64 3.6.7-1~18.04 [7,328 B]

Get:8 http://ports.ubuntu.com/ubuntu-ports bionic/main arm64 python3-numpy arm64 1:1.13.3-2ubuntu1 [1,734 kB]

# install qt5

$ sudo apt-get install qtbase5-dev

Get:1 http://ports.ubuntu.com/ubuntu-ports bionic-updates/main arm64 libqt5core5a arm64 5.9.5+dfsg-0ubuntu2.4 [1933 kB]

package summary

sudo apt -y install cmake cmake-gui cmake-qt-gui

sudo apt -y install libpython3-dev python3-numpy python3-pip

sudo apt -y install qtbase5-dev

# other packages for c++ programs

sudo apt -y install libcrypto++-dev

sudo apt -y install libgoogle-glog-dev

sudo apt -y install libgflags-dev

sudo apt -y install --no-install-recommends libboost-all-dev

# top and jtop

sudo -H pip3 install jetson-stats

sudo jtop -h

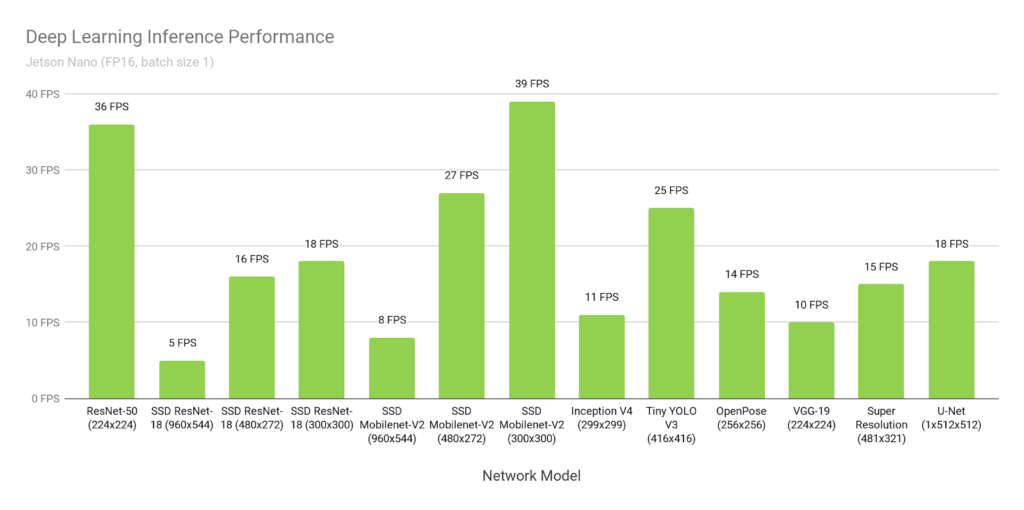

Build jetson-inference

see jetson-inference

cmake and configure

git clone --recursive https://github.com/dusty-nv/jetson-inference

cd jetson-inference

git submodule update --init

mkdir build

cd build

sudo cmake ..

configure

do not download models

do not download pytorch

Run the model and PyTorch download scripts later if you really need them

$ cd jetson-inference/tools

$ ./download-models.sh

$ cd jetson-inference/build

$ ./install-pytorch.sh

or download a model archive directly, for example:

wget -b -c https://github.com/dusty-nv/jetson-inference/releases/download/model-mirror-190618/ResNet-18.tar.gz

configure output

[jetson-inference] Checking for 'dialog' deb package...installed

[jetson-inference] FOUND_DIALOG=INSTALLED

[jetson-inference] Model selection status: 0

[jetson-inference] No models were selected for download.

[jetson-inference] to run this tool again, use the following commands:

$ cd <jetson-inference>/tools

$ ./download-models.sh

[jetson-inference] Checking for 'dialog' deb package...installed

[jetson-inference] FOUND_DIALOG=INSTALLED

head: cannot open '/etc/nv_tegra_release' for reading: No such file or directory

[jetson-inference] reading L4T version from "dpkg-query --show nvidia-l4t-core"

[jetson-inference] Jetson BSP Version: L4T R32.2

[jetson-inference] Package selection status: 1

[jetson-inference] Package selection cancelled.

[jetson-inference] installation complete, exiting with status code 0

[jetson-inference] to run this tool again, use the following commands:

$ cd <jetson-inference>/build

$ ./install-pytorch.sh

[Pre-build] Finished CMakePreBuild script

-- Finished installing dependencies

-- using patched FindCUDA.cmake

Looking for pthread.h

Looking for pthread.h - found

Looking for pthread_create

Looking for pthread_create - not found

Looking for pthread_create in pthreads

Looking for pthread_create in pthreads - not found

Looking for pthread_create in pthread

Looking for pthread_create in pthread - found

Found Threads: TRUE

-- using patched FindCUDA.cmake

-- CUDA version: 10.0

-- CUDA 10 detected, enabling SM_72

-- OpenCV version: 3.3.1

-- OpenCV version >= 3.0.0, enabling OpenCV

-- system arch: aarch64

-- output path: /home/nano/git/jetson-inference/build/aarch64

-- Copying /home/nano/git/jetson-inference/c/detectNet.h

-- Copying /home/nano/git/jetson-inference/c/homographyNet.h

-- Copying /home/nano/git/jetson-inference/c/imageNet.h

-- Copying /home/nano/git/jetson-inference/c/segNet.h

-- Copying /home/nano/git/jetson-inference/c/superResNet.h

-- Copying /home/nano/git/jetson-inference/c/tensorNet.h

-- Copying /home/nano/git/jetson-inference/c/imageNet.cuh

-- Copying /home/nano/git/jetson-inference/calibration/randInt8Calibrator.h

Could NOT find Doxygen (missing: DOXYGEN_EXECUTABLE)

-- found Qt5Widgets version: 5.9.5

-- found Qt5Widgets defines: -DQT_WIDGETS_LIB;-DQT_GUI_LIB;-DQT_CORE_LIB

-- found Qt5Widgets library: Qt5::Widgets

-- found Qt5Widgets include: /usr/include/aarch64-linux-gnu/qt5/;/usr/include/aarch64-linux-gnu/qt5/QtWidgets;/usr/include/aarch64-linux-gnu/qt5/QtGui;/usr/include/aarch64-linux-gnu/qt5/QtCore;/usr/lib/aarch64-linux-gnu/qt5//mkspecs/linux-g++

-- camera-capture: building as submodule, /home/nano/git/jetson-inference/tools

-- jetson-utils: building as submodule, /home/nano/git/jetson-inference

-- Copying /home/nano/git/jetson-inference/utils/XML.h

-- Copying /home/nano/git/jetson-inference/utils/commandLine.h

-- Copying /home/nano/git/jetson-inference/utils/filesystem.h

-- Copying /home/nano/git/jetson-inference/utils/mat33.h

-- Copying /home/nano/git/jetson-inference/utils/pi.h

-- Copying /home/nano/git/jetson-inference/utils/rand.h

-- Copying /home/nano/git/jetson-inference/utils/timespec.h

-- Copying /home/nano/git/jetson-inference/utils/camera/gstCamera.h

-- Copying /home/nano/git/jetson-inference/utils/camera/v4l2Camera.h

-- Copying /home/nano/git/jetson-inference/utils/codec/gstDecoder.h

-- Copying /home/nano/git/jetson-inference/utils/codec/gstEncoder.h

-- Copying /home/nano/git/jetson-inference/utils/codec/gstUtility.h

-- Copying /home/nano/git/jetson-inference/utils/cuda/cudaFont.h

-- Copying /home/nano/git/jetson-inference/utils/cuda/cudaMappedMemory.h

-- Copying /home/nano/git/jetson-inference/utils/cuda/cudaNormalize.h

-- Copying /home/nano/git/jetson-inference/utils/cuda/cudaOverlay.h

-- Copying /home/nano/git/jetson-inference/utils/cuda/cudaRGB.h

-- Copying /home/nano/git/jetson-inference/utils/cuda/cudaResize.h

-- Copying /home/nano/git/jetson-inference/utils/cuda/cudaUtility.h

-- Copying /home/nano/git/jetson-inference/utils/cuda/cudaWarp.h

-- Copying /home/nano/git/jetson-inference/utils/cuda/cudaYUV.h

-- Copying /home/nano/git/jetson-inference/utils/display/glDisplay.h

-- Copying /home/nano/git/jetson-inference/utils/display/glTexture.h

-- Copying /home/nano/git/jetson-inference/utils/display/glUtility.h

-- Copying /home/nano/git/jetson-inference/utils/image/imageIO.h

-- Copying /home/nano/git/jetson-inference/utils/image/loadImage.h

-- Copying /home/nano/git/jetson-inference/utils/input/devInput.h

-- Copying /home/nano/git/jetson-inference/utils/input/devJoystick.h

-- Copying /home/nano/git/jetson-inference/utils/input/devKeyboard.h

-- Copying /home/nano/git/jetson-inference/utils/network/Endian.h

-- Copying /home/nano/git/jetson-inference/utils/network/IPv4.h

-- Copying /home/nano/git/jetson-inference/utils/network/NetworkAdapter.h

-- Copying /home/nano/git/jetson-inference/utils/network/Socket.h

-- Copying /home/nano/git/jetson-inference/utils/threads/Event.h

-- Copying /home/nano/git/jetson-inference/utils/threads/Mutex.h

-- Copying /home/nano/git/jetson-inference/utils/threads/Process.h

-- Copying /home/nano/git/jetson-inference/utils/threads/Thread.h

-- trying to build Python bindings for Python versions: 2.7;3.6;3.7

-- detecting Python 2.7...

-- found Python version: 2.7 (2.7.15+)

-- found Python include: /usr/include/python2.7

-- found Python library: /usr/lib/aarch64-linux-gnu/libpython2.7.so

-- CMake module path: /home/nano/git/jetson-inference/utils/cuda;/home/nano/git/jetson-inference/utils/python/bindings

NumPy ver. 1.13.3 found (include: /usr/lib/python2.7/dist-packages/numpy/core/include)

-- found NumPy version: 1.13.3

-- found NumPy include: /usr/lib/python2.7/dist-packages/numpy/core/include

-- detecting Python 3.6...

-- found Python version: 3.6 (3.6.9)

-- found Python include: /usr/include/python3.6m

-- found Python library: /usr/lib/aarch64-linux-gnu/libpython3.6m.so

-- CMake module path: /home/nano/git/jetson-inference/utils/cuda;/home/nano/git/jetson-inference/utils/python/bindings

NumPy ver. 1.13.3 found (include: /usr/lib/python3/dist-packages/numpy/core/include)

-- found NumPy version: 1.13.3

-- found NumPy include: /usr/lib/python3/dist-packages/numpy/core/include

-- detecting Python 3.7...

-- Python 3.7 wasn't found

-- Copying /home/nano/git/jetson-inference/utils/python/examples/camera-viewer.py

-- Copying /home/nano/git/jetson-inference/utils/python/examples/cuda-from-numpy.py

-- Copying /home/nano/git/jetson-inference/utils/python/examples/cuda-to-numpy.py

-- Copying /home/nano/git/jetson-inference/utils/python/examples/gl-display-test.py

-- trying to build Python bindings for Python versions: 2.7;3.6;3.7

-- detecting Python 2.7...

-- found Python version: 2.7 (2.7.15+)

-- found Python include: /usr/include/python2.7

-- found Python library: /usr/lib/aarch64-linux-gnu/libpython2.7.so

-- detecting Python 3.6...

-- found Python version: 3.6 (3.6.9)

-- found Python include: /usr/include/python3.6m

-- found Python library: /usr/lib/aarch64-linux-gnu/libpython3.6m.so

-- detecting Python 3.7...

-- Python 3.7 wasn't found

-- Copying /home/nano/git/jetson-inference/python/examples/detectnet-camera.py

-- Copying /home/nano/git/jetson-inference/python/examples/detectnet-console.py

-- Copying /home/nano/git/jetson-inference/python/examples/imagenet-camera.py

-- Copying /home/nano/git/jetson-inference/python/examples/imagenet-console.py

-- Copying /home/nano/git/jetson-inference/python/examples/my-detection.py

-- Copying /home/nano/git/jetson-inference/python/examples/my-recognition.py

-- Copying /home/nano/git/jetson-inference/python/examples/segnet-batch.py

-- Copying /home/nano/git/jetson-inference/python/examples/segnet-camera.py

-- Copying /home/nano/git/jetson-inference/python/examples/segnet-console.py

Configuring done

compile and install

generate and compile

sudo make

install jetson-inference

sudo make install

sudo ldconfig

output

[ 1%] Linking CXX shared library ../aarch64/lib/libjetson-utils.so

[ 31%] Built target jetson-utils

[ 32%] Linking CXX shared library aarch64/lib/libjetson-inference.so

[ 43%] Built target jetson-inference

[ 44%] Linking CXX executable ../../aarch64/bin/imagenet-console

[ 45%] Built target imagenet-console

[ 46%] Linking CXX executable ../../aarch64/bin/imagenet-camera

[ 47%] Built target imagenet-camera

[ 47%] Linking CXX executable ../../aarch64/bin/detectnet-console

[ 48%] Built target detectnet-console

[ 49%] Linking CXX executable ../../aarch64/bin/detectnet-camera

[ 50%] Built target detectnet-camera

[ 50%] Linking CXX executable ../../aarch64/bin/segnet-console

[ 51%] Built target segnet-console

[ 52%] Linking CXX executable ../../aarch64/bin/segnet-camera

[ 53%] Built target segnet-camera

[ 54%] Linking CXX executable ../../aarch64/bin/superres-console

[ 55%] Built target superres-console

[ 56%] Linking CXX executable ../../aarch64/bin/homography-console

[ 57%] Built target homography-console

[ 58%] Linking CXX executable ../../aarch64/bin/homography-camera

[ 59%] Built target homography-camera

[ 60%] Automatic MOC for target camera-capture

[ 60%] Built target camera-capture_autogen

[ 61%] Linking CXX executable ../../aarch64/bin/camera-capture

[ 64%] Built target camera-capture

[ 65%] Linking CXX executable ../../aarch64/bin/trt-bench

[ 66%] Built target trt-bench

[ 67%] Linking CXX executable ../../aarch64/bin/trt-console

[ 68%] Built target trt-console

[ 69%] Linking CXX executable ../../../aarch64/bin/camera-viewer

[ 70%] Built target camera-viewer

[ 71%] Linking CXX executable ../../../aarch64/bin/v4l2-console

[ 72%] Built target v4l2-console

[ 73%] Linking CXX executable ../../../aarch64/bin/v4l2-display

[ 74%] Built target v4l2-display

[ 75%] Linking CXX executable ../../../aarch64/bin/gl-display-test

[ 76%] Built target gl-display-test

[ 76%] Linking CXX shared library ../../../aarch64/lib/python/2.7/jetson_utils_python.so

[ 82%] Built target jetson-utils-python-27

[ 83%] Linking CXX shared library ../../../aarch64/lib/python/3.6/jetson_utils_python.so

[ 89%] Built target jetson-utils-python-36

[ 90%] Linking CXX shared library ../../aarch64/lib/python/2.7/jetson_inference_python.so

[ 95%] Built target jetson-inference-python-27

[ 96%] Linking CXX shared library ../../aarch64/lib/python/3.6/jetson_inference_python.so

[100%] Built target jetson-inference-python-36

Install the project...

-- Install configuration: ""

-- Installing: /usr/local/include/jetson-inference/detectNet.h

-- Installing: /usr/local/include/jetson-inference/homographyNet.h

-- Installing: /usr/local/include/jetson-inference/imageNet.h

-- Installing: /usr/local/include/jetson-inference/segNet.h

-- Installing: /usr/local/include/jetson-inference/superResNet.h

-- Installing: /usr/local/include/jetson-inference/tensorNet.h

-- Installing: /usr/local/include/jetson-inference/imageNet.cuh

-- Installing: /usr/local/include/jetson-inference/randInt8Calibrator.h

-- Installing: /usr/local/lib/libjetson-inference.so

-- Set runtime path of "/usr/local/lib/libjetson-inference.so" to ""

-- Installing: /usr/local/share/jetson-inference/cmake/jetson-inferenceConfig.cmake

-- Installing: /usr/local/share/jetson-inference/cmake/jetson-inferenceConfig-noconfig.cmake

-- Installing: /usr/local/bin/imagenet-console

-- Set runtime path of "/usr/local/bin/imagenet-console" to ""

-- Installing: /usr/local/bin/imagenet-camera

-- Set runtime path of "/usr/local/bin/imagenet-camera" to ""

-- Installing: /usr/local/bin/detectnet-console

-- Set runtime path of "/usr/local/bin/detectnet-console" to ""

-- Installing: /usr/local/bin/detectnet-camera

-- Set runtime path of "/usr/local/bin/detectnet-camera" to ""

-- Installing: /usr/local/bin/segnet-console

-- Set runtime path of "/usr/local/bin/segnet-console" to ""

-- Installing: /usr/local/bin/segnet-camera

-- Set runtime path of "/usr/local/bin/segnet-camera" to ""

-- Installing: /usr/local/bin/superres-console

-- Set runtime path of "/usr/local/bin/superres-console" to ""

-- Installing: /usr/local/bin/homography-console

-- Set runtime path of "/usr/local/bin/homography-console" to ""

-- Installing: /usr/local/bin/homography-camera

-- Set runtime path of "/usr/local/bin/homography-camera" to ""

-- Installing: /usr/local/bin/camera-capture

-- Set runtime path of "/usr/local/bin/camera-capture" to ""

-- Installing: /usr/local/include/jetson-utils/XML.h

-- Installing: /usr/local/include/jetson-utils/commandLine.h

-- Installing: /usr/local/include/jetson-utils/filesystem.h

-- Installing: /usr/local/include/jetson-utils/mat33.h

-- Installing: /usr/local/include/jetson-utils/pi.h

-- Installing: /usr/local/include/jetson-utils/rand.h

-- Installing: /usr/local/include/jetson-utils/timespec.h

-- Installing: /usr/local/include/jetson-utils/gstCamera.h

-- Installing: /usr/local/include/jetson-utils/v4l2Camera.h

-- Installing: /usr/local/include/jetson-utils/gstDecoder.h

-- Installing: /usr/local/include/jetson-utils/gstEncoder.h

-- Installing: /usr/local/include/jetson-utils/gstUtility.h

-- Installing: /usr/local/include/jetson-utils/cudaFont.h

-- Installing: /usr/local/include/jetson-utils/cudaMappedMemory.h

-- Installing: /usr/local/include/jetson-utils/cudaNormalize.h

-- Installing: /usr/local/include/jetson-utils/cudaOverlay.h

-- Installing: /usr/local/include/jetson-utils/cudaRGB.h

-- Installing: /usr/local/include/jetson-utils/cudaResize.h

-- Installing: /usr/local/include/jetson-utils/cudaUtility.h

-- Installing: /usr/local/include/jetson-utils/cudaWarp.h

-- Installing: /usr/local/include/jetson-utils/cudaYUV.h

-- Installing: /usr/local/include/jetson-utils/glDisplay.h

-- Installing: /usr/local/include/jetson-utils/glTexture.h

-- Installing: /usr/local/include/jetson-utils/glUtility.h

-- Installing: /usr/local/include/jetson-utils/imageIO.h

-- Installing: /usr/local/include/jetson-utils/loadImage.h

-- Installing: /usr/local/include/jetson-utils/devInput.h

-- Installing: /usr/local/include/jetson-utils/devJoystick.h

-- Installing: /usr/local/include/jetson-utils/devKeyboard.h

-- Installing: /usr/local/include/jetson-utils/Endian.h

-- Installing: /usr/local/include/jetson-utils/IPv4.h

-- Installing: /usr/local/include/jetson-utils/NetworkAdapter.h

-- Installing: /usr/local/include/jetson-utils/Socket.h

-- Installing: /usr/local/include/jetson-utils/Event.h

-- Installing: /usr/local/include/jetson-utils/Mutex.h

-- Installing: /usr/local/include/jetson-utils/Process.h

-- Installing: /usr/local/include/jetson-utils/Thread.h

-- Installing: /usr/local/lib/libjetson-utils.so

-- Installing: /usr/local/share/jetson-utils/cmake/jetson-utilsConfig.cmake

-- Installing: /usr/local/share/jetson-utils/cmake/jetson-utilsConfig-noconfig.cmake

-- Installing: /usr/local/bin/camera-viewer

-- Set runtime path of "/usr/local/bin/camera-viewer" to ""

-- Installing: /usr/local/bin/gl-display-test

-- Set runtime path of "/usr/local/bin/gl-display-test" to ""

-- Installing: /usr/local/bin/camera-viewer.py

-- Installing: /usr/local/bin/cuda-from-numpy.py

-- Installing: /usr/local/bin/cuda-to-numpy.py

-- Installing: /usr/local/bin/gl-display-test.py

-- Installing: /usr/lib/python2.7/dist-packages/jetson_utils_python.so

-- Set runtime path of "/usr/lib/python2.7/dist-packages/jetson_utils_python.so" to ""

-- Installing: /usr/lib/python2.7/dist-packages/Jetson

-- Installing: /usr/lib/python2.7/dist-packages/Jetson/Utils

-- Installing: /usr/lib/python2.7/dist-packages/Jetson/Utils/__init__.py

-- Installing: /usr/lib/python2.7/dist-packages/Jetson/__init__.py

-- Installing: /usr/lib/python2.7/dist-packages/jetson

-- Installing: /usr/lib/python2.7/dist-packages/jetson/utils

-- Installing: /usr/lib/python2.7/dist-packages/jetson/utils/__init__.py

-- Installing: /usr/lib/python2.7/dist-packages/jetson/__init__.py

-- Installing: /usr/lib/python3.6/dist-packages/jetson_utils_python.so

-- Set runtime path of "/usr/lib/python3.6/dist-packages/jetson_utils_python.so" to ""

-- Installing: /usr/lib/python3.6/dist-packages/Jetson

-- Installing: /usr/lib/python3.6/dist-packages/Jetson/Utils

-- Installing: /usr/lib/python3.6/dist-packages/Jetson/Utils/__init__.py

-- Installing: /usr/lib/python3.6/dist-packages/Jetson/__init__.py

-- Installing: /usr/lib/python3.6/dist-packages/jetson

-- Installing: /usr/lib/python3.6/dist-packages/jetson/utils

-- Installing: /usr/lib/python3.6/dist-packages/jetson/utils/__init__.py

-- Installing: /usr/lib/python3.6/dist-packages/jetson/__init__.py

-- Installing: /usr/local/bin/detectnet-camera.py

-- Installing: /usr/local/bin/detectnet-console.py

-- Installing: /usr/local/bin/imagenet-camera.py

-- Installing: /usr/local/bin/imagenet-console.py

-- Installing: /usr/local/bin/my-detection.py

-- Installing: /usr/local/bin/my-recognition.py

-- Installing: /usr/local/bin/segnet-batch.py

-- Installing: /usr/local/bin/segnet-camera.py

-- Installing: /usr/local/bin/segnet-console.py

-- Installing: /usr/lib/python2.7/dist-packages/jetson_inference_python.so

-- Set runtime path of "/usr/lib/python2.7/dist-packages/jetson_inference_python.so" to ""

-- Up-to-date: /usr/lib/python2.7/dist-packages/Jetson

-- Installing: /usr/lib/python2.7/dist-packages/Jetson/__init__.py

-- Installing: /usr/lib/python2.7/dist-packages/Jetson/Inference

-- Installing: /usr/lib/python2.7/dist-packages/Jetson/Inference/__init__.py

-- Up-to-date: /usr/lib/python2.7/dist-packages/jetson

-- Installing: /usr/lib/python2.7/dist-packages/jetson/__init__.py

-- Installing: /usr/lib/python2.7/dist-packages/jetson/inference

-- Installing: /usr/lib/python2.7/dist-packages/jetson/inference/__init__.py

-- Installing: /usr/lib/python3.6/dist-packages/jetson_inference_python.so

-- Set runtime path of "/usr/lib/python3.6/dist-packages/jetson_inference_python.so" to ""

-- Up-to-date: /usr/lib/python3.6/dist-packages/Jetson

-- Installing: /usr/lib/python3.6/dist-packages/Jetson/__init__.py

-- Installing: /usr/lib/python3.6/dist-packages/Jetson/Inference

-- Installing: /usr/lib/python3.6/dist-packages/Jetson/Inference/__init__.py

-- Up-to-date: /usr/lib/python3.6/dist-packages/jetson

-- Installing: /usr/lib/python3.6/dist-packages/jetson/__init__.py

-- Installing: /usr/lib/python3.6/dist-packages/jetson/inference

-- Installing: /usr/lib/python3.6/dist-packages/jetson/inference/__init__.py

The project will be built to jetson-inference/build/aarch64, with the following directory structure:

|-build

   \aarch64

      \bin             where the sample binaries are built to

         \networks     where the network models are stored

         \images       where the test images are stored

      \include         where the headers reside

      \lib             where the libraries are built to

These also get installed under /usr/local/.

The Python bindings for the jetson.inference and jetson.utils modules also get installed under /usr/lib/python*/dist-packages/.
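After `sudo make install`, a quick sanity check is to ask Python whether it can locate the two binding modules. The sketch below uses only the standard library; `missing_modules` is a made-up helper name, and the module names are the ones mentioned above.

```python
import importlib.util


def missing_modules(names):
    """Return the subset of dotted module names that Python cannot locate."""
    missing = []
    for name in names:
        try:
            # For a dotted name, find_spec imports the parent package first
            # and raises ModuleNotFoundError if the parent is absent.
            spec = importlib.util.find_spec(name)
        except (ImportError, ValueError):
            spec = None
        if spec is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # An empty list means both bindings are visible to this interpreter.
    print(missing_modules(["jetson.inference", "jetson.utils"]))
```

Run it with both `python` and `python3`, since the bindings are installed separately for each interpreter.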

view libjetson-utils and libjetson-inference in lib

$ tree build/aarch64/lib

.

├── libjetson-inference.so

├── libjetson-utils.so

└── python

    ├── 2.7

    │   ├── jetson_inference_python.so

    │   └── jetson_utils_python.so

    └── 3.6

        ├── jetson_inference_python.so

        └── jetson_utils_python.so

3 directories, 6 files

libjetson-inference.so

$ ldd libjetson-inference.so

linux-vdso.so.1 (0x0000007fa6e8c000)

libpthread.so.0 => /lib/aarch64-linux-gnu/libpthread.so.0 (0x0000007fa6cf3000)

libdl.so.2 => /lib/aarch64-linux-gnu/libdl.so.2 (0x0000007fa6cde000)

librt.so.1 => /lib/aarch64-linux-gnu/librt.so.1 (0x0000007fa6cc7000)

libjetson-utils.so => /home/nano/git/jetson-inference/build/aarch64/lib/libjetson-utils.so (0x0000007fa6b6f000)

libnvinfer.so.5 => /usr/lib/aarch64-linux-gnu/libnvinfer.so.5 (0x0000007f9dc23000)

libnvinfer_plugin.so.5 => /usr/lib/aarch64-linux-gnu/libnvinfer_plugin.so.5 (0x0000007f9d94d000)

libnvparsers.so.5 => /usr/lib/aarch64-linux-gnu/libnvparsers.so.5 (0x0000007f9d60e000)

libnvonnxparser.so.0 => /usr/lib/aarch64-linux-gnu/libnvonnxparser.so.0 (0x0000007f9d1ea000)

libopencv_calib3d.so.3.3 => /usr/lib/libopencv_calib3d.so.3.3 (0x0000007f9d0be000)

libopencv_core.so.3.3 => /usr/lib/libopencv_core.so.3.3 (0x0000007f9cde9000)

libstdc++.so.6 => /usr/lib/aarch64-linux-gnu/libstdc++.so.6 (0x0000007f9cc56000)

libm.so.6 => /lib/aarch64-linux-gnu/libm.so.6 (0x0000007f9cb9c000)

libgcc_s.so.1 => /lib/aarch64-linux-gnu/libgcc_s.so.1 (0x0000007f9cb78000)

libc.so.6 => /lib/aarch64-linux-gnu/libc.so.6 (0x0000007f9ca1f000)

/lib/ld-linux-aarch64.so.1 (0x0000007fa6e61000)

libGL.so.1 => /usr/lib/aarch64-linux-gnu/libGL.so.1 (0x0000007f9c920000)

libGLEW.so.2.0 => /usr/lib/aarch64-linux-gnu/libGLEW.so.2.0 (0x0000007f9c874000)

libgstreamer-1.0.so.0 => /usr/lib/aarch64-linux-gnu/libgstreamer-1.0.so.0 (0x0000007f9c744000)

libgstapp-1.0.so.0 => /usr/lib/aarch64-linux-gnu/libgstapp-1.0.so.0 (0x0000007f9c726000)

libcudnn.so.7 => /usr/lib/aarch64-linux-gnu/libcudnn.so.7 (0x0000007f858c0000)

libcublas.so.10.0 => /usr/local/cuda-10.0/targets/aarch64-linux/lib/libcublas.so.10.0 (0x0000007f7ff59000)

libcudart.so.10.0 => /usr/local/cuda-10.0/targets/aarch64-linux/lib/libcudart.so.10.0 (0x0000007f7fee8000)

libopencv_flann.so.3.3 => /usr/lib/libopencv_flann.so.3.3 (0x0000007f7fe85000)

libopencv_imgproc.so.3.3 => /usr/lib/libopencv_imgproc.so.3.3 (0x0000007f7f6b8000)
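With this many dependencies it is easy to miss an unresolved one. The following sketch turns `ldd` output into a dictionary and lists any library reported as `not found`. The helper names `parse_ldd` and `unresolved` are made up, and `libfoo.so` in the example is a hypothetical missing library, not something from the listing above.

```python
def parse_ldd(output):
    """Return {library_name: resolved_path_or_None} from `ldd` output."""
    libs = {}
    for line in output.splitlines():
        line = line.strip()
        if "=>" not in line:
            continue  # skips linux-vdso and the dynamic loader lines
        name, _, rest = line.partition("=>")
        rest = rest.strip()
        if rest.startswith("not found"):
            libs[name.strip()] = None
        else:
            # Drop the trailing load address such as "(0x0000007f9dc23000)".
            libs[name.strip()] = rest.split(" (")[0]
    return libs


def unresolved(output):
    """Return the sorted names of libraries that ldd could not resolve."""
    return sorted(name for name, path in parse_ldd(output).items() if path is None)


if __name__ == "__main__":
    sample = (
        "libnvinfer.so.5 => /usr/lib/aarch64-linux-gnu/libnvinfer.so.5 (0x0000007f9dc23000)\n"
        "libfoo.so => not found\n"  # hypothetical missing library
    )
    print(unresolved(sample))
```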

imageNet demo

C++

$ cd jetson-inference/build/aarch64/bin

$ sudo ./imagenet-console --network=resnet-18 images/orange_0.jpg output_0.jpg

output

imageNet -- loading classification network model from:

-- prototxt networks/ResNet-18/deploy.prototxt

-- model networks/ResNet-18/ResNet-18.caffemodel

-- class_labels networks/ilsvrc12_synset_words.txt

-- input_blob 'data'

-- output_blob 'prob'

-- batch_size 1

[TRT] TensorRT version 5.1.6

[TRT] loading NVIDIA plugins...

[TRT] Plugin Creator registration succeeded - GridAnchor_TRT

[TRT] Plugin Creator registration succeeded - NMS_TRT

[TRT] Plugin Creator registration succeeded - Reorg_TRT

[TRT] Plugin Creator registration succeeded - Region_TRT

[TRT] Plugin Creator registration succeeded - Clip_TRT

[TRT] Plugin Creator registration succeeded - LReLU_TRT

[TRT] Plugin Creator registration succeeded - PriorBox_TRT

[TRT] Plugin Creator registration succeeded - Normalize_TRT

[TRT] Plugin Creator registration succeeded - RPROI_TRT

[TRT] Plugin Creator registration succeeded - BatchedNMS_TRT

[TRT] completed loading NVIDIA plugins.

[TRT] detected model format - caffe (extension '.caffemodel')

[TRT] desired precision specified for GPU: FASTEST

[TRT] requested fasted precision for device GPU without providing valid calibrator, disabling INT8

[TRT] native precisions detected for GPU: FP32, FP16

[TRT] selecting fastest native precision for GPU: FP16

[TRT] attempting to open engine cache file networks/ResNet-18/ResNet-18.caffemodel.1.1.GPU.FP16.engine

[TRT] cache file not found, profiling network model on device GPU

[TRT] device GPU, loading networks/ResNet-18/deploy.prototxt networks/ResNet-18/ResNet-18.caffemodel

[TRT] retrieved Output tensor "prob": 1000x1x1

[TRT] retrieved Input tensor "data": 3x224x224

[TRT] device GPU, configuring CUDA engine

[TRT] device GPU, building FP16: ON

[TRT] device GPU, building INT8: OFF

[TRT] device GPU, building CUDA engine (this may take a few minutes the first time a network is loaded)

[TRT] device GPU, completed building CUDA engine

[TRT] network profiling complete, writing engine cache to networks/ResNet-18/ResNet-18.caffemodel.1.1.GPU.FP16.engine

[TRT] device GPU, completed writing engine cache to networks/ResNet-18/ResNet-18.caffemodel.1.1.GPU.FP16.engine

[TRT] device GPU, networks/ResNet-18/ResNet-18.caffemodel loaded

[TRT] device GPU, CUDA engine context initialized with 2 bindings

[TRT] binding -- index 0

-- name 'data'

-- type FP32

-- in/out INPUT

-- # dims 3

-- dim #0 3 (CHANNEL)

-- dim #1 224 (SPATIAL)

-- dim #2 224 (SPATIAL)

[TRT] binding -- index 1

-- name 'prob'

-- type FP32

-- in/out OUTPUT

-- # dims 3

-- dim #0 1000 (CHANNEL)

-- dim #1 1 (SPATIAL)

-- dim #2 1 (SPATIAL)

[TRT] binding to input 0 data binding index: 0

[TRT] binding to input 0 data dims (b=1 c=3 h=224 w=224) size=602112

[TRT] binding to output 0 prob binding index: 1

[TRT] binding to output 0 prob dims (b=1 c=1000 h=1 w=1) size=4000

device GPU, networks/ResNet-18/ResNet-18.caffemodel initialized.

[TRT] networks/ResNet-18/ResNet-18.caffemodel loaded

imageNet -- loaded 1000 class info entries

networks/ResNet-18/ResNet-18.caffemodel initialized.

[image] loaded 'images/orange_0.jpg' (1920 x 1920, 3 channels)

class 0950 - 0.996028 (orange)

imagenet-console: 'images/orange_0.jpg' -> 99.60276% class #950 (orange)

[TRT] ------------------------------------------------

[TRT] Timing Report networks/ResNet-18/ResNet-18.caffemodel

[TRT] ------------------------------------------------

[TRT] Pre-Process CPU 0.10824ms CUDA 0.34156ms

[TRT] Network CPU 12.91854ms CUDA 12.47026ms

[TRT] Post-Process CPU 0.80311ms CUDA 0.82672ms

[TRT] Total CPU 13.82989ms CUDA 13.63854ms

[TRT] ------------------------------------------------

[TRT] note -- when processing a single image, run 'sudo jetson_clocks' before

to disable DVFS for more accurate profiling/timing measurements

imagenet-console: attempting to save output image to 'output_0.jpg'

imagenet-console: completed saving 'output_0.jpg'

imagenet-console: shutting down...

imagenet-console: shutdown complete
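The timing report above can be turned into a rough throughput figure. The following is a minimal sketch, assuming the report keeps the `[TRT] <stage> CPU <x>ms CUDA <y>ms` layout shown in this log; `parse_timing` and `frames_per_second` are hypothetical helper names, not part of jetson-inference.

```python
import re

# Stage lines copied from the timing report above.
REPORT = """\
[TRT] Pre-Process CPU 0.10824ms CUDA 0.34156ms
[TRT] Network CPU 12.91854ms CUDA 12.47026ms
[TRT] Post-Process CPU 0.80311ms CUDA 0.82672ms
[TRT] Total CPU 13.82989ms CUDA 13.63854ms
"""

# One stage per line: name, CPU time and CUDA time in milliseconds.
LINE = re.compile(r"\[TRT\]\s+(\S+)\s+CPU\s+([\d.]+)ms\s+CUDA\s+([\d.]+)ms")


def parse_timing(report):
    """Return {stage: (cpu_ms, cuda_ms)} for each stage line in the report."""
    stages = {}
    for match in LINE.finditer(report):
        stage, cpu_ms, cuda_ms = match.groups()
        stages[stage] = (float(cpu_ms), float(cuda_ms))
    return stages


def frames_per_second(total_ms):
    """Convert a per-image latency in milliseconds to images per second."""
    return 1000.0 / total_ms


timing = parse_timing(REPORT)
total_cuda_ms = timing["Total"][1]
print("Total CUDA %.2f ms -> %.1f images/s" % (total_cuda_ms, frames_per_second(total_cuda_ms)))
```

For this run the total CUDA time of 13.63854 ms works out to roughly 73 images per second. As the log itself notes, a single-image measurement is affected by DVFS, so treat the number as an estimate unless `sudo jetson_clocks` was run first.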

Python

$ cd jetson-inference/build/aarch64/bin

$ sudo ./imagenet-console.py --network=resnet-18 images/orange_0.jpg output_0.jpg

output

jetson.inference.__init__.py

jetson.inference -- initializing Python 2.7 bindings...

jetson.inference -- registering module types...

jetson.inference -- done registering module types

jetson.inference -- done Python 2.7 binding initialization

jetson.utils.__init__.py

jetson.utils -- initializing Python 2.7 bindings...

jetson.utils -- registering module functions...

jetson.utils -- done registering module functions

jetson.utils -- registering module types...

jetson.utils -- done registering module types

jetson.utils -- done Python 2.7 binding initialization

[image] loaded 'images/orange_0.jpg' (1920 x 1920, 3 channels)

jetson.inference -- PyTensorNet_New()

jetson.inference -- PyImageNet_Init()

jetson.inference -- imageNet loading network using argv command line params

jetson.inference -- imageNet.__init__() argv[0] = './imagenet-console.py'

jetson.inference -- imageNet.__init__() argv[1] = '--network=resnet-18'

jetson.inference -- imageNet.__init__() argv[2] = 'images/orange_0.jpg'

jetson.inference -- imageNet.__init__() argv[3] = 'output_0.jpg'

imageNet -- loading classification network model from:

-- prototxt networks/ResNet-18/deploy.prototxt

-- model networks/ResNet-18/ResNet-18.caffemodel

-- class_labels networks/ilsvrc12_synset_words.txt

-- input_blob 'data'

-- output_blob 'prob'

-- batch_size 1

[TRT] TensorRT version 5.1.6

[TRT] loading NVIDIA plugins...

[TRT] Plugin Creator registration succeeded - GridAnchor_TRT

[TRT] Plugin Creator registration succeeded - NMS_TRT

[TRT] Plugin Creator registration succeeded - Reorg_TRT

[TRT] Plugin Creator registration succeeded - Region_TRT

[TRT] Plugin Creator registration succeeded - Clip_TRT

[TRT] Plugin Creator registration succeeded - LReLU_TRT

[TRT] Plugin Creator registration succeeded - PriorBox_TRT

[TRT] Plugin Creator registration succeeded - Normalize_TRT

[TRT] Plugin Creator registration succeeded - RPROI_TRT

[TRT] Plugin Creator registration succeeded - BatchedNMS_TRT

[TRT] completed loading NVIDIA plugins.

[TRT] detected model format - caffe (extension '.caffemodel')

[TRT] desired precision specified for GPU: FASTEST

[TRT] requested fasted precision for device GPU without providing valid calibrator, disabling INT8

[TRT] native precisions detected for GPU: FP32, FP16

[TRT] selecting fastest native precision for GPU: FP16

[TRT] attempting to open engine cache file networks/ResNet-18/ResNet-18.caffemodel.1.1.GPU.FP16.engine

[TRT] loading network profile from engine cache... networks/ResNet-18/ResNet-18.caffemodel.1.1.GPU.FP16.engine

[TRT] device GPU, networks/ResNet-18/ResNet-18.caffemodel loaded

[TRT] device GPU, CUDA engine context initialized with 2 bindings

[TRT] binding -- index 0

-- name 'data'

-- type FP32

-- in/out INPUT

-- # dims 3

-- dim #0 3 (CHANNEL)

-- dim #1 224 (SPATIAL)

-- dim #2 224 (SPATIAL)

[TRT] binding -- index 1

-- name 'prob'

-- type FP32

-- in/out OUTPUT

-- # dims 3

-- dim #0 1000 (CHANNEL)

-- dim #1 1 (SPATIAL)

-- dim #2 1 (SPATIAL)

[TRT] binding to input 0 data binding index: 0

[TRT] binding to input 0 data dims (b=1 c=3 h=224 w=224) size=602112

[TRT] binding to output 0 prob binding index: 1

[TRT] binding to output 0 prob dims (b=1 c=1000 h=1 w=1) size=4000

device GPU, networks/ResNet-18/ResNet-18.caffemodel initialized.

[TRT] networks/ResNet-18/ResNet-18.caffemodel loaded

imageNet -- loaded 1000 class info entries

networks/ResNet-18/ResNet-18.caffemodel initialized.

class 0950 - 0.996028 (orange)

image is recognized as 'orange' (class #950) with 99.602759% confidence

[TRT] ------------------------------------------------

[TRT] Timing Report networks/ResNet-18/ResNet-18.caffemodel

[TRT] ------------------------------------------------

[TRT] Pre-Process CPU 0.06884ms CUDA 0.32849ms

[TRT] Network CPU 11.44888ms CUDA 11.01536ms

[TRT] Post-Process CPU 0.20783ms CUDA 0.20708ms

[TRT] Total CPU 11.72555ms CUDA 11.55094ms

[TRT] ------------------------------------------------

[TRT] note -- when processing a single image, run 'sudo jetson_clocks' before

to disable DVFS for more accurate profiling/timing measurements

jetson.utils -- PyFont_New()

jetson.utils -- PyFont_Init()

jetson.utils -- PyFont_Dealloc()

jetson.utils -- freeing CUDA mapped memory

PyTensorNet_Dealloc()

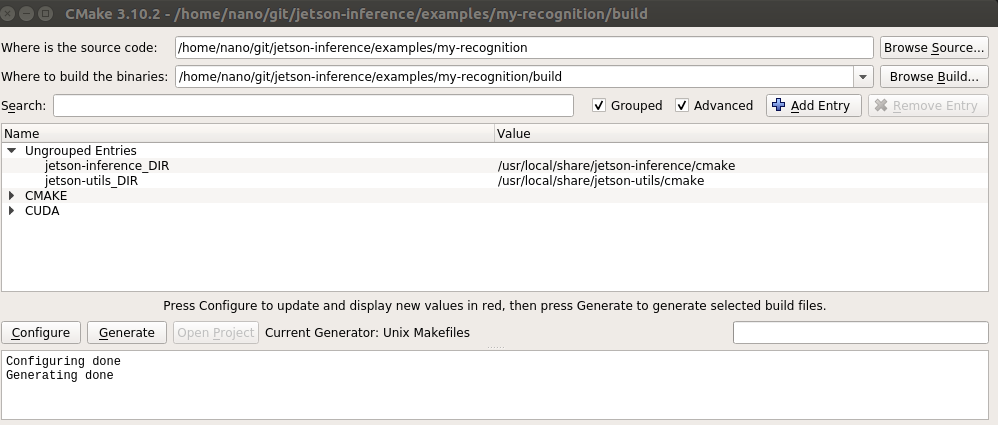
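The `size=` values in the binding lines of both logs follow from the tensor dimensions. Here is a small worked sketch, assuming each element is a 4-byte FP32 value (the logs report `type FP32` for both bindings); `binding_bytes` is a hypothetical helper used only for the arithmetic.

```python
def binding_bytes(batch, channels, height, width, bytes_per_element=4):
    """Size in bytes of a dense b x c x h x w tensor (FP32 by default)."""
    return batch * channels * height * width * bytes_per_element


# Input binding 'data': b=1 c=3 h=224 w=224
input_bytes = binding_bytes(1, 3, 224, 224)

# Output binding 'prob': b=1 c=1000 h=1 w=1
output_bytes = binding_bytes(1, 1000, 1, 1)

print(input_bytes, output_bytes)
```

The two results match the `size=602112` and `size=4000` values printed in the logs. The bindings stay FP32 even though the engine itself was built with FP16 enabled.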

my-recognition

# build

$ cd jetson-inference/examples/my-recognition

$ mkdir build && cd build && cmake-gui ..

# compile

$ make

Scanning dependencies of target my-recognition

[ 50%] Building CXX object CMakeFiles/my-recognition.dir/my-recognition.cpp.o

[100%] Linking CXX executable my-recognition

[100%] Built target my-recognition

# view libraries

$ ldd my-recognition

linux-vdso.so.1 (0x0000007fb5546000)

libjetson-inference.so => /usr/local/lib/libjetson-inference.so (0x0000007fb53ea000)

libjetson-utils.so => /usr/local/lib/libjetson-utils.so (0x0000007fb5292000)

libstdc++.so.6 => /usr/lib/aarch64-linux-gnu/libstdc++.so.6 (0x0000007fb50db000)

libc.so.6 => /lib/aarch64-linux-gnu/libc.so.6 (0x0000007fb4f82000)

/lib/ld-linux-aarch64.so.1 (0x0000007fb551b000)

libpthread.so.0 => /lib/aarch64-linux-gnu/libpthread.so.0 (0x0000007fb4f56000)

libdl.so.2 => /lib/aarch64-linux-gnu/libdl.so.2 (0x0000007fb4f41000)

librt.so.1 => /lib/aarch64-linux-gnu/librt.so.1 (0x0000007fb4f2a000)

libnvinfer.so.5 => /usr/lib/aarch64-linux-gnu/libnvinfer.so.5 (0x0000007fabfde000)

libnvinfer_plugin.so.5 => /usr/lib/aarch64-linux-gnu/libnvinfer_plugin.so.5 (0x0000007fabd08000)

libnvparsers.so.5 => /usr/lib/aarch64-linux-gnu/libnvparsers.so.5 (0x0000007fab9c9000)

libnvonnxparser.so.0 => /usr/lib/aarch64-linux-gnu/libnvonnxparser.so.0 (0x0000007fab5a5000)

libopencv_calib3d.so.3.3 => /usr/lib/libopencv_calib3d.so.3.3 (0x0000007fab479000)

libopencv_core.so.3.3 => /usr/lib/libopencv_core.so.3.3 (0x0000007fab1a4000)
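Reading a long `ldd` listing by eye is error-prone, so here is a minimal sketch for checking it from a script. It assumes the usual `name => path (address)` layout seen above, plus the `=> not found` form that `ldd` prints for an unresolved library; `parse_ldd` is a hypothetical helper, and the last sample line is made up to show the failure case.

```python
SAMPLE = """\
linux-vdso.so.1 (0x0000007fb5546000)
libjetson-inference.so => /usr/local/lib/libjetson-inference.so (0x0000007fb53ea000)
libjetson-utils.so => /usr/local/lib/libjetson-utils.so (0x0000007fb5292000)
libnvinfer.so.5 => /usr/lib/aarch64-linux-gnu/libnvinfer.so.5 (0x0000007fabfde000)
libexample-missing.so => not found
"""


def parse_ldd(output):
    """Map each library name to its resolved path, or None if unresolved."""
    resolved = {}
    for line in output.splitlines():
        line = line.strip()
        if "=>" not in line:
            continue  # the vdso and the dynamic loader have no '=>' mapping
        name, _, target = line.partition("=>")
        target = target.strip()
        if target.startswith("not found"):
            resolved[name.strip()] = None
        else:
            # Drop the trailing "(0x...)" load address.
            resolved[name.strip()] = target.split(" (")[0]
    return resolved


libs = parse_ldd(SAMPLE)
missing = sorted(name for name, path in libs.items() if path is None)
print("missing:", missing)
```

On the device, the text could come from `subprocess.check_output(["ldd", "my-recognition"])` instead of the sample string.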

run and get result

$ ./build/my-recognition polar_bear.jpg

class 0296 - 0.997434 (ice bear, polar bear, Ursus Maritimus, Thalarctos maritimus)
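The same classification can be done from Python. The sketch below is based on my understanding of the jetson.inference Python bindings at the time of writing (`imageNet`, `loadImageRGBA`, `Classify`, `GetClassDesc`); treat the exact signatures as an assumption and check them against your installed version. `classify` and `format_result` are hypothetical helper names, and `classify` only works on a Jetson with jetson-inference installed.

```python
def format_result(class_idx, confidence, class_desc):
    """Format a result in the same style as the console line above."""
    return "class {:04d} - {:f} ({})".format(class_idx, confidence, class_desc)


def classify(image_path, network="googlenet"):
    """Classify one image file and return (class_idx, confidence, description)."""
    # Imported lazily so this file can still be loaded on a machine
    # that does not have the Jetson bindings installed.
    import jetson.inference
    import jetson.utils

    net = jetson.inference.imageNet(network)
    img, width, height = jetson.utils.loadImageRGBA(image_path)
    class_idx, confidence = net.Classify(img, width, height)
    return class_idx, confidence, net.GetClassDesc(class_idx)
```

On the Nano, `print(format_result(*classify("polar_bear.jpg")))` should print a line in the same format as the C++ program's result.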

imagenet-camera

imagenet-camera usage

$ ./imagenet-camera --help

usage: imagenet-camera [-h] [--network NETWORK] [--camera CAMERA]

[--width WIDTH] [--height HEIGHT]

Classify a live camera stream using an image recognition DNN.

optional arguments:

--help show this help message and exit

--network NETWORK pre-trained model to load (see below for options)

--camera CAMERA index of the MIPI CSI camera to use (e.g. CSI camera 0),

or for VL42 cameras, the /dev/video device to use.

by default, MIPI CSI camera 0 will be used.

--width WIDTH desired width of camera stream (default is 1280 pixels)

--height HEIGHT desired height of camera stream (default is 720 pixels)

imageNet arguments:

--network NETWORK pre-trained model to load, one of the following:

* alexnet

* googlenet (default)

* googlenet-12

* resnet-18

* resnet-50

* resnet-101

* resnet-152

* vgg-16

* vgg-19

* inception-v4

--model MODEL path to custom model to load (caffemodel, uff, or onnx)

--prototxt PROTOTXT path to custom prototxt to load (for .caffemodel only)

--labels LABELS path to text file containing the labels for each class

--input_blob INPUT name of the input layer (default is 'data')

--output_blob OUTPUT name of the output layer (default is 'prob')

--batch_size BATCH maximum batch size (default is 1)

--profile enable layer profiling in TensorRT

camera type

- MIPI CSI cameras are used by specifying the sensor index (0 or 1, etc.)

- V4L2 USB cameras are used by specifying their /dev/video node (/dev/video0, /dev/video1, etc.)

- The default is to use MIPI CSI sensor 0 (--camera=0)
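The rule for the `--camera` value can be written down as a small check. This is only an illustrative sketch of the convention described above, not code from jetson-inference; `camera_kind` is a hypothetical helper.

```python
def camera_kind(camera):
    """Classify a --camera value as 'csi' (sensor index) or 'v4l2' (device node)."""
    camera = str(camera).strip()
    if camera.startswith("/dev/video"):
        return "v4l2"
    if camera.isdigit():
        return "csi"
    raise ValueError("expected a sensor index or a /dev/video node: %r" % camera)


for value in ("0", "1", "/dev/video0"):
    print(value, "->", camera_kind(value))
```

So `--camera=0` selects MIPI CSI sensor 0, while `--camera=/dev/video0` selects the first V4L2 USB camera.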

Query the available formats with the following commands:

$ sudo apt-get install -y v4l-utils

$ v4l2-ctl --list-formats-ext

ioctl: VIDIOC_ENUM_FMT

Index : 0

Type : Video Capture

Pixel Format: 'MJPG' (compressed)

Name : Motion-JPEG

Size: Discrete 1920x1080

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 160x120

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 176x144

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 320x240

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 352x288

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 640x360

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 640x480

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 1280x720

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 1280x1024

Interval: Discrete 0.033s (30.000 fps)

Index : 1

Type : Video Capture

Pixel Format: 'YUYV'

Name : YUYV 4:2:2

Size: Discrete 1920x1080

Interval: Discrete 0.200s (5.000 fps)

Size: Discrete 160x120

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 176x144

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 320x240

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 352x288

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 640x360

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 640x480

Interval: Discrete 0.033s (30.000 fps)

Size: Discrete 1280x720

Interval: Discrete 0.100s (10.000 fps)

Size: Discrete 1280x1024

Interval: Discrete 0.200s (5.000 fps)
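The listing shows why the pixel format matters: this camera reaches 30 fps at every size with MJPG, but with YUYV it drops to 10 fps at 1280x720 and 5 fps at 1920x1080. Below is a minimal sketch for picking a usable mode from such a listing. It assumes the `Pixel Format` / `Size: Discrete` / `Interval: Discrete` layout shown above (other v4l2-ctl versions may print a different layout); `parse_formats` and `largest_mode` are hypothetical helpers, and the sample is a shortened copy of the output.

```python
import re

SAMPLE = """\
Pixel Format: 'MJPG' (compressed)
Size: Discrete 1920x1080
Interval: Discrete 0.033s (30.000 fps)
Size: Discrete 1280x720
Interval: Discrete 0.033s (30.000 fps)
Pixel Format: 'YUYV'
Size: Discrete 1920x1080
Interval: Discrete 0.200s (5.000 fps)
Size: Discrete 640x480
Interval: Discrete 0.033s (30.000 fps)
Size: Discrete 1280x720
Interval: Discrete 0.100s (10.000 fps)
"""


def parse_formats(text):
    """Return a list of (pixel_format, width, height, fps) tuples."""
    modes = []
    pixel_format = size = None
    for line in text.splitlines():
        line = line.strip()
        m = re.match(r"Pixel Format\s*:\s*'(\w+)'", line)
        if m:
            pixel_format, size = m.group(1), None  # a new format block starts
            continue
        m = re.match(r"Size: Discrete (\d+)x(\d+)", line)
        if m:
            size = (int(m.group(1)), int(m.group(2)))
            continue
        m = re.search(r"\(([\d.]+) fps\)", line)
        if m and pixel_format and size:
            modes.append((pixel_format, size[0], size[1], float(m.group(1))))
    return modes


def largest_mode(modes, pixel_format, min_fps=30.0):
    """Pick the largest frame size a format can deliver at min_fps or better."""
    candidates = [m for m in modes if m[0] == pixel_format and m[3] >= min_fps]
    return max(candidates, key=lambda m: m[1] * m[2]) if candidates else None


modes = parse_formats(SAMPLE)
print(largest_mode(modes, "MJPG"))
print(largest_mode(modes, "YUYV"))
```

The width and height picked this way are the values to pass as `--width` and `--height` to imagenet-camera.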

run demo

$ ./imagenet-camera --network=resnet-18 --camera=0 --width=640 --height=480

nvpmodel

# /etc/nvpmodel.conf

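sudo nvpmodel -q --verbose # show the current power mode

sudo nvpmodel -p --verbose # print all supported modes and their configurations

sudo nvpmodel -m 0 # switch to the maximum-performance mode; all CPU cores are online, but the clocks are not yet at their maximum frequency

sudo jetson_clocks # set the clocks to their maximum frequency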

Use DeepStream On Jetson Nano

install DeepStream SDK

DeepStream SDK 4.0.1

DeepStream SDK 4.0.1 requires the installation of JetPack 4.2.2.

Download deepstream_sdk_v4.0.1_jetson.tbz2 from here.

DeepStream SDK 4.0.2

DeepStream SDK 4.0.2 requires the installation of JetPack 4.3.

Download deepstream_sdk_v4.0.2_jetson.tbz2 or deepstream-4.0_4.0.2-1_arm64.deb from here.
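Since each DeepStream release is tied to one JetPack release, it helps to check the pairing before downloading anything. The sketch below only encodes the two pairs stated in this section; `REQUIRED_JETPACK` and `deepstream_for_jetpack` are hypothetical names, and the table would need extending for other releases.

```python
# Pairs stated in this section: DeepStream SDK release -> required JetPack release.
REQUIRED_JETPACK = {
    "4.0.1": "4.2.2",
    "4.0.2": "4.3",
}


def deepstream_for_jetpack(jetpack_version):
    """Return the DeepStream releases listed here that require this JetPack."""
    return sorted(ds for ds, jp in REQUIRED_JETPACK.items() if jp == jetpack_version)


print(deepstream_for_jetpack("4.3"))
```

An empty list means none of the releases listed here match the installed JetPack, so the install steps below should not be expected to work as written.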

# install prerequisite packages for the DeepStream SDK

sudo apt install \

libssl1.0.0 \

libgstreamer1.0-0 \

gstreamer1.0-tools \

gstreamer1.0-plugins-good \

gstreamer1.0-plugins-bad \

gstreamer1.0-plugins-ugly \

gstreamer1.0-libav \

libgstrtspserver-1.0-0 \

libjansson4=2.11-1

sudo apt-get install librdkafka1=0.11.3-1build1

# (1) install deepstream sdk from tar file

tar -xpvf deepstream_sdk_v4.0.2_jetson.tbz2

cd deepstream_sdk_v4.0.2_jetson

sudo tar -xvpf binaries.tbz2 -C /

sudo ./install.sh

sudo ldconfig

# (2) or install deepstream sdk from deb

sudo apt-get install ./deepstream-4.0_4.0.2-1_arm64.deb

## NOTE: sources and samples folders will be found in /opt/nvidia/deepstream/deepstream-4.0

# To boost the clocks

# After you have installed DeepStream SDK,

# run these commands on the Jetson device to boost the clocks:

sudo nvpmodel -m 0

sudo jetson_clocks

running deepstream-app

$ deepstream-app --help

Usage:

deepstream-app [OPTION?] Nvidia DeepStream Demo

Help Options:

-h, --help Show help options

--help-all Show all help options

--help-gst Show GStreamer Options

Application Options:

-v, --version Print DeepStreamSDK version

-t, --tiledtext Display Bounding box labels in tiled mode

--version-all Print DeepStreamSDK and dependencies version

-c, --cfg-file Set the config file

-i, --input-file Set the input file

deepstream-app -c <path_to_config_file>

export GST_PLUGIN_PATH="/usr/lib/aarch64-linux-gnu/gstreamer-1.0/"

/opt/nvidia/deepstream/deepstream-4.0/sources/apps/sample_apps/deepstream-test1$ deepstream-test1-app ~/video/pengpeng.avi

cd /opt/nvidia/deepstream/deepstream-4.0/samples/configs/deepstream-app

deepstream-app -c config_infer_primary_nano.txt

# error occurs

** ERROR: <create_multi_source_bin:682>: Failed to create element 'src_bin_muxer'

** ERROR: <create_multi_source_bin:745>: create_multi_source_bin failed

** ERROR: <create_pipeline:1045>: create_pipeline failed

** ERROR: <main:632>: Failed to create pipeline

Quitting

App run failed

# solutions

rm ~/.cache/gstreamer-1.0/registry.aarch64.bin

export DISPLAY=:1

deepstream-app -c config_infer_primary_nano.txt

(deepstream-app:16051): GStreamer-CRITICAL **: 16:31:26.057: gst_element_get_static_pad: assertion 'GST_IS_ELEMENT (element)' failed

Segmentation fault (core dumped)
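The cache-clearing step of the workaround can be scripted so that it does not fail when the file is already gone. This is a minimal sketch, assuming the registry cache lives at the path used in the `rm` command above; `clear_gst_registry` is a hypothetical helper. It only removes the cache and does not address the segmentation fault shown in the last output.

```python
import os


def clear_gst_registry(home=None):
    """Delete the cached GStreamer plugin registry; return True if a file was removed."""
    home = home or os.path.expanduser("~")
    cache = os.path.join(home, ".cache", "gstreamer-1.0", "registry.aarch64.bin")
    try:
        os.remove(cache)
        return True
    except OSError:
        return False  # nothing to remove, or the file could not be removed
```

Calling `clear_gst_registry()` before launching deepstream-app makes GStreamer rescan its plugins on the next start.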

History

- 2019/12/09: created.