Series

- Part 1:cpp cuda programming tutorial

- Part 2: cuda activation kernels

- Part 3: cublasSgemm for large matrix multiplication on gpu

Guide

introduction

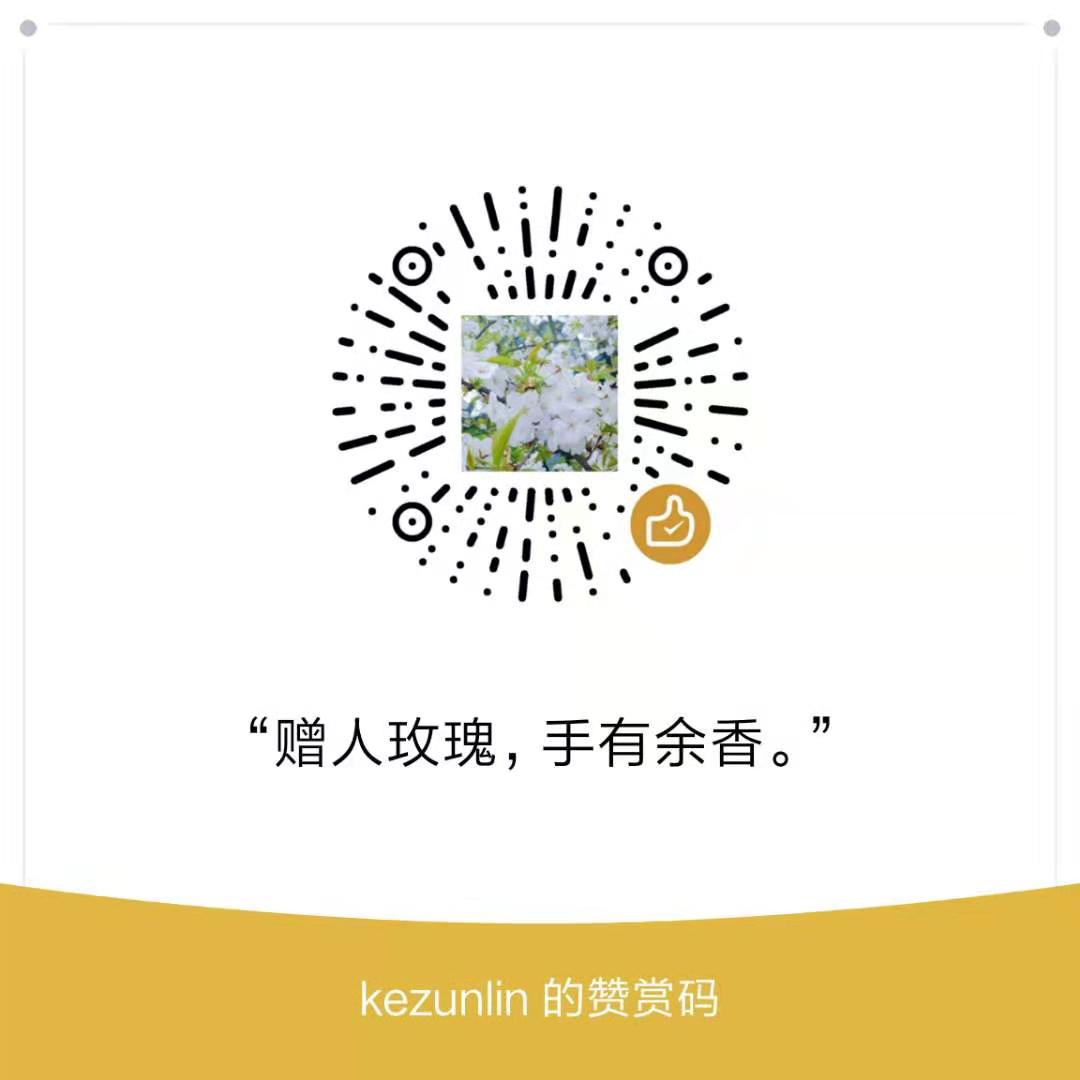

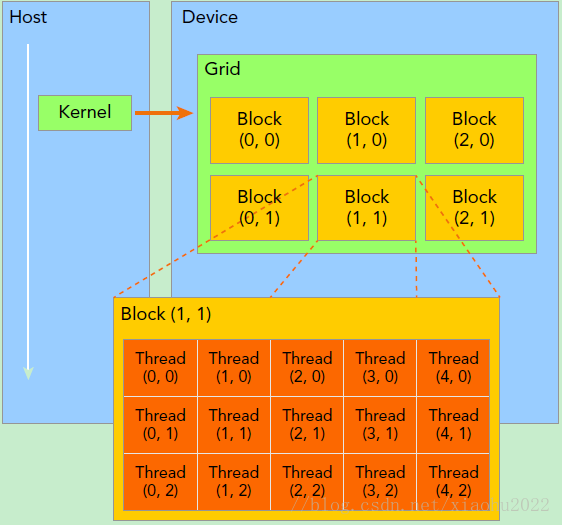

在异构计算架构中,GPU与CPU通过PCIe总线连接在一起来协同工作,CPU所在位置称为为主机端(host),而GPU所在位置称为设备端(device),如下图所示。

基于CPU+GPU的异构计算平台可以优势互补,CPU负责处理逻辑复杂的串行程序,而GPU重点处理数据密集型的并行计算程序,从而发挥最大功效。

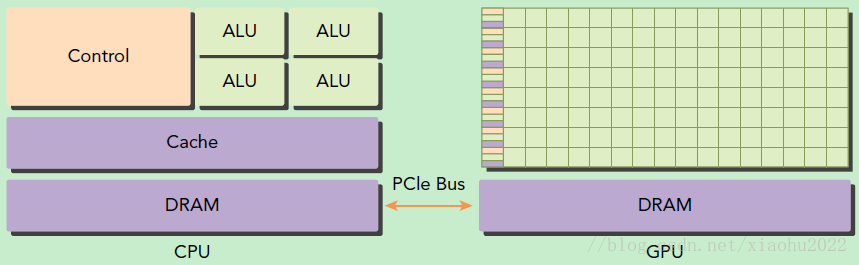

CUDA编程模型基础

- host: CPU,Memory

- device: GPU,Memory

CUDA程序中既包含host程序,又包含device程序,它们分别在CPU和GPU上运行。同时,host与device之间可以进行通信,这样它们之间可以进行数据拷贝。典型的CUDA程序的执行流程如下:

- 分配host内存,并进行数据初始化;

- 分配device内存,并从host将数据拷贝到device上;

- 调用CUDA的核函数(kernel function)在device上完成指定的运算;

- 将device上的运算结果拷贝到host上;

- 释放device和host上分配的内存。

kernel

kernel是CUDA中一个重要的概念,kernel是在device上线程中并行执行的函数,核函数用__global__符号声明,在调用时需要用<<<grid, block>>>来指定kernel要执行的线程数量,在CUDA中,每一个线程都要执行核函数,并且每个线程会分配一个唯一的线程号thread ID,这个ID值可以通过核函数的内置变量threadIdx来获得。

由于GPU实际上是异构模型,所以需要区分host和device上的代码,在CUDA中是通过函数类型限定词开区别host和device上的函数,主要的三个函数类型限定词如下:

__global__:在device上执行,从host中调用(一些特定的GPU也可以从device上调用),返回类型必须是void,不支持可变参数,不能成为类成员函数。注意用__global__定义的kernel是异步的,这意味着host不会等待kernel执行完就执行下一步。__device__:在device上执行,单仅可以从device中调用,不可以和__global__同时用。__host__:在host上执行,仅可以从host上调用,一般省略不写,不可以和__global__同时用,但可和__device__同时用,此时函数会在device和host都编译。

grid/block/thread

dim3 grid(3, 2);

dim3 block(5, 3);

kernel_fun<<< grid, block >>>(prams...);

The key is in CUDA’s

<<<1, 1>>>syntax. This is called the execution configuration, and it tells the CUDA runtime how many parallel threads to use for the launch on the GPU.

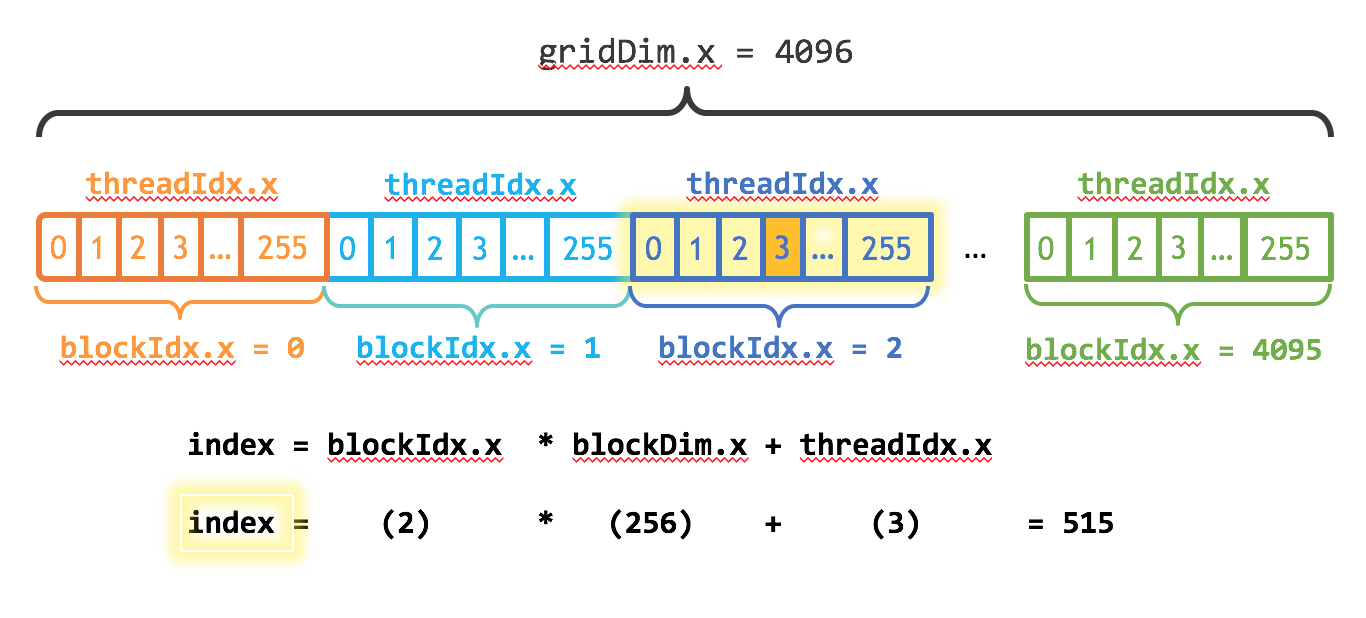

builtin variables

- threadIdx

- blockIdx

- blockDim

- gridDim

对于一个2-dim的block(Dx,Dy),线程(x,y)的ID值为(x+y∗Dx),

如果是3-dim的block(Dx,Dy,Dz),线程(x,y,z)的ID值为(x+y∗Dx+z∗Dx∗Dy)。

matrix add

# kernel function

__global__ void MatAdd(float A[N][N], float B[N][N], float C[N][N])

{

int col = blockIdx.x * blockDim.x + threadIdx.x;

int row = blockIdx.y * blockDim.y + threadIdx.y;

if (col < N && row < N)

C[row][col] = A[row][col] + B[row][col];

}

int main()

{

...

// Kernel config

dim3 threadsPerBlock(16, 16);

dim3 numBlocks(N / threadsPerBlock.x, N / threadsPerBlock.y);

// kernel call

MatAdd<<<numBlocks, threadsPerBlock>>>(A, B, C);

...

}

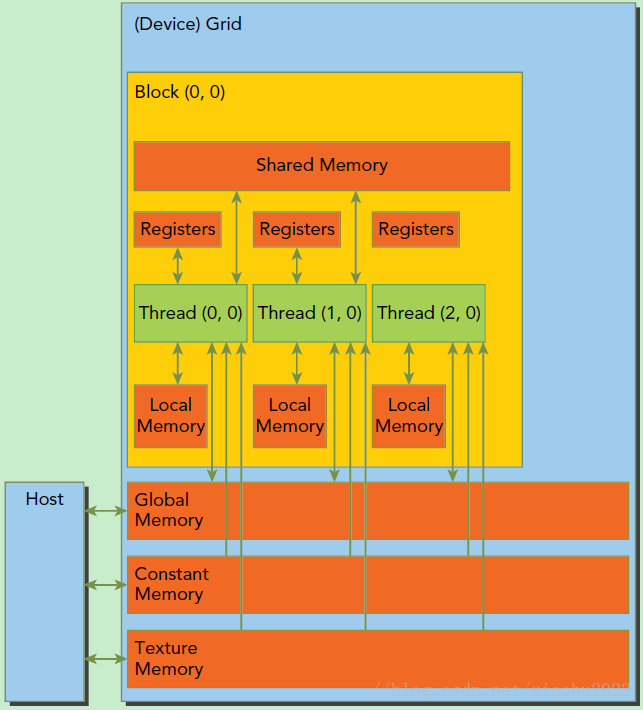

CUDA内存模型

gpu memory

logical/physical layer

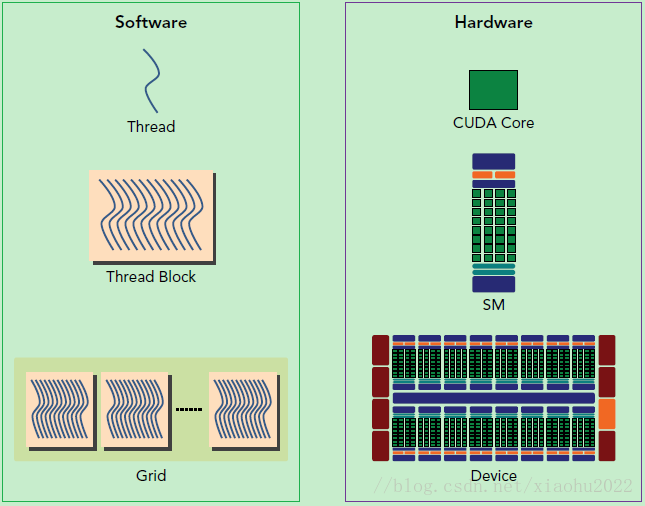

SP最基本的处理单元,Streaming Processor,也称为CUDA core。SM是英文名是Streaming Multiprocessor,翻译过来就是流式多处理器。- 一个kernel的各个线程块有可能被分配多个

SM,所以grid只是逻辑层,而SM才是执行的物理层。SM采用的是SIMT(Single-Instruction, Multiple-Thread,单指令多线程)架构,基本的执行单元是线程束(wraps),线程束包含32个线程,这些线程同时执行相同的指令,但是每个线程都包含自己的指令地址计数器和寄存器状态,也有自己独立的执行路径。 - 由于

SM的基本执行单元是包含32个线程的线程束,所以block大小一般要设置为32的倍数。

- 每个thread由每个SP执行

- 每个thread block由SM执行

- 一个kernel其实由一个grid来执行,一个kernel一次只能在一个GPU上执行

Code

see cuda-demo

CMakeLists.txt

cmake_minimum_required (VERSION 2.8.7)

project (CudaExample)

enable_language(C)

enable_language(CXX)

set(CMAKE_CXX_STANDARD 11)

# Set the output folder where your program will be created

set(CMAKE_BINARY_DIR ${CMAKE_SOURCE_DIR}/bin)

set(EXECUTABLE_OUTPUT_PATH ${CMAKE_BINARY_DIR})

set(LIBRARY_OUTPUT_PATH ${CMAKE_BINARY_DIR})

find_package(CUDA REQUIRED) # user-defined

MESSAGE( [Main] " CUDA_LIBRARIES = ${CUDA_LIBRARIES}")

MESSAGE( [Main] " CUDA_INCLUDE_DIRS = ${CUDA_INCLUDE_DIRS}")

# The following folder will be included

include_directories(

${CUDA_INCLUDE_DIRS}

)

set(CUDA_NVCC_FLAGS "-g -G")

set(GENCODE -gencode=arch=compute_61,code=sm_61)

cuda_add_executable(demo src/demo.cu OPTIONS ${GENCODE})

target_link_libraries(demo ${CUDA_LIBRARIES})

#cuda_add_library(gpu SHARED ${CURRENT_HEADERS} ${CURRENT_SOURCES})

#cuda_add_library(gpu STATIC ${CURRENT_HEADERS} ${CURRENT_SOURCES})

vector add

#include <stdlib.h>

#include <iostream>

#include <cuda_runtime.h>

using namespace std;

/*

https://blog.csdn.net/fb_help/article/details/79330815

foo.cuh + foo.cu

*/

// function to add the elements of two arrays

void add(int n, float *a, float *b, float *c)

{

for (int i = 0; i < n; i++)

c[i] = a[i] + b[i];

}

__global__ void kernel_add(int n, float *a, float *b, float *c)

{

// thread id

int i = blockDim.x * blockIdx.x + threadIdx.x;

c[i] = a[i] + b[i];

}

__global__ void kernel_add2(int n, float *a, float *b, float *c)

{

// thread id

int index = blockDim.x * blockIdx.x + threadIdx.x;

// grid-stride loop

int grid_stride = blockDim.x * gridDim.x; // 256*4096

// in this case; only 1 loop

for (int i = index; i < n; i += grid_stride)

{

c[i] = a[i] + b[i];

}

}

void device_info()

{

int deviceCount;

cudaGetDeviceCount(&deviceCount);

for (int i = 0;i<deviceCount;i++)

{

cudaDeviceProp devProp;

cudaGetDeviceProperties(&devProp, i);

std::cout << "使用GPU device " << i << ": " << devProp.name << std::endl;

std::cout << "设备全局内存总量: " << devProp.totalGlobalMem / 1024 / 1024 << "MB" << std::endl;

std::cout << "SM的数量:" << devProp.multiProcessorCount << std::endl;

std::cout << "每个SM的最大线程数:" << devProp.maxThreadsPerMultiProcessor << std::endl;

std::cout << "每个SM的最大线程束数(warps):" << devProp.maxThreadsPerMultiProcessor / 32 << std::endl;

std::cout << "每个线程块(Block)的共享内存大小:" << devProp.sharedMemPerBlock / 1024.0 << " KB" << std::endl;

std::cout << "每个线程块(Block)的最大线程数:" << devProp.maxThreadsPerBlock << std::endl;

std::cout << "每个线程块(Block)可用的32位寄存器数量: " << devProp.regsPerBlock << std::endl;

std::cout << "======================================================" << std::endl;

}

}

void test_cpu()

{

float *A, *B, *C;

int n = 1024 * 1024;

int size = n * sizeof(float);

// CPU端分配内存

A = (float*)malloc(size);

B = (float*)malloc(size);

C = (float*)malloc(size);

// 初始化数组

for (int i = 0;i<n;i++)

{

A[i] = 90.0;

B[i] = 10.0;

}

// Run kernel on 1M elements on the CPU

add(n, A, B, C);

// 校验误差

float max_error = 0.0;

for (int i = 0;i<n;i++)

{

max_error += fabs(100.0 - C[i]);

}

cout << "max error is " << max_error << endl;

// 释放CPU端的内存

free(A);

free(B);

free(C);

}

/*

cudaMalloc+cudaMemcpy+cudaFree

*/

int test_gpu_1()

{

float*A, *Ad, *B, *Bd, *C, *Cd;

int n = 1024 * 1024;

int size = n * sizeof(float);

// CPU端分配内存

A = (float*)malloc(size);

B = (float*)malloc(size);

C = (float*)malloc(size);

// 初始化数组

for(int i=0;i<n;i++)

{

A[i] = 90.0;

B[i] = 10.0;

}

// GPU端分配内存

cudaMalloc((void**)&Ad, size);

cudaMalloc((void**)&Bd, size);

cudaMalloc((void**)&Cd, size);

// CPU的数据拷贝到GPU端

cudaMemcpy(Ad, A, size, cudaMemcpyHostToDevice);

cudaMemcpy(Bd, B, size, cudaMemcpyHostToDevice);

cudaMemcpy(Bd, B, size, cudaMemcpyHostToDevice);

// 1-dim

// 定义kernel执行配置,(1024*1024/512)个block,每个block里面有512个线程

int block_size = 512;

int num_of_blocks = (n + block_size - 1) / block_size;

dim3 dimBlock(block_size);

dim3 dimGrid(num_of_blocks);

// 执行kernel

kernel_add<<<dimGrid, dimBlock>>>(n, Ad, Bd, Cd);

// 将在GPU端计算好的结果拷贝回CPU端

cudaMemcpy(C, Cd, size, cudaMemcpyDeviceToHost);

// 校验误差

float max_error = 0.0;

for(int i=0;i<n;i++)

{

max_error += fabs(100.0 - C[i]);

}

cout << "max error is " << max_error << endl;

// 释放CPU端、GPU端的内存

free(A);

free(B);

free(C);

cudaFree(Ad);

cudaFree(Bd);

cudaFree(Cd);

return 0;

}

/*

cudaMallocManaged+cudaDeviceSynchronize+cudaFree

*/

void test_gpu_2()

{

float*A, *B, *C;

int n = 1024 * 1024;

int size = n * sizeof(float);

// Allocate Unified Memory – accessible from CPU or GPU

cudaMallocManaged((void**)&A, size);

cudaMallocManaged((void**)&B, size);

cudaMallocManaged((void**)&C, size);

// 初始化数组

for (int i = 0;i<n;i++)

{

A[i] = 90.0;

B[i] = 10.0;

}

// 1-dim

// 定义kernel执行配置,(1024*1024/512)个block,每个block里面有512个线程

int block_size = 512;

int num_of_blocks = (n + block_size - 1) / block_size;

dim3 dimBlock(block_size);

dim3 dimGrid(num_of_blocks);

// 执行kernel

kernel_add2 << <dimGrid, dimBlock >> >(n, A, B, C);

// Wait for GPU to finish before accessing on host

cudaDeviceSynchronize(); // block until the GPU has finished all tasks

// 校验误差

float max_error = 0.0;

for (int i = 0;i<n;i++)

{

max_error += fabs(100.0 - C[i]);

}

cout << "max error is " << max_error << endl;

// Free Unified Memory

cudaFree(A);

cudaFree(B);

cudaFree(C);

}

int main()

{

device_info();

test_cpu();

test_gpu_1();

test_gpu_2();

return 0;

}

notes for block_size and num_of_blocks

int block_size = 512;

int num_of_blocks = (n + block_size - 1) / block_size; // 4096

dim3 dimBlock(block_size);

dim3 dimGrid(num_of_blocks);

notes for grid-stride loop

__global__ void kernel_add2(int n, float *a, float *b, float *c)

{

// thread id

int index = blockDim.x * blockIdx.x + threadIdx.x;

// grid-stride loop

int grid_stride = blockDim.x * gridDim.x; // 256*4096

// in this case; only 1 loop

for (int i = index; i < n; i += grid_stride)

{

c[i] = a[i] + b[i];

}

}

nvprof

nvprof.exe demo.exe

==8748== Profiling application: .\demo.exe

==8748== Profiling result:

Time(%) Time Calls Avg Min Max Name

43.63% 1.6413ms 3 547.10us 517.71us 591.41us [CUDA memcpy HtoD]

30.11% 1.1327ms 1 1.1327ms 1.1327ms 1.1327ms [CUDA memcpy DtoH]

26.26% 987.80us 2 493.90us 243.43us 744.37us kernel_add(int, float*, float*, float*)

at

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v8.0\bin\nvprof.exe

matrix multiply

- for 1-dim vector add, we use 1-dim grid and block

- for 2-dim matrix multiply, we use 2-dim grid and block.

// ========================================

// 2-dim

// ========================================

// 矩阵类型,行优先,M(row, col) = *(M.elements + row * M.width + col)

struct Matrix

{

int width;

int height;

float *elements;

};

// 获取矩阵A的(row, col)元素

__device__ float getElement(Matrix *A, int row, int col)

{

return A->elements[row * A->width + col];

}

// 为矩阵A的(row, col)元素赋值

__device__ void setElement(Matrix *A, int row, int col, float value)

{

A->elements[row * A->width + col] = value;

}

// 矩阵相乘kernel,2-D,每个线程计算一个元素

__global__ void matMulKernel(Matrix *A, Matrix *B, Matrix *C)

{

float sum = 0.0;

int row = threadIdx.y + blockIdx.y * blockDim.y;

int col = threadIdx.x + blockIdx.x * blockDim.x;

for (int i = 0; i < A->width; ++i)

{

sum += getElement(A, row, i) * getElement(B, i, col);

}

setElement(C, row, col, sum);

}

void test_gpu_3()

{

int width = 1 << 8;

int height = 1 << 8;

Matrix *A, *B, *C;

// 申请托管内存

cudaMallocManaged((void**)&A, sizeof(Matrix));

cudaMallocManaged((void**)&B, sizeof(Matrix));

cudaMallocManaged((void**)&C, sizeof(Matrix));

int nBytes = width * height * sizeof(float);

cudaMallocManaged((void**)&A->elements, nBytes);

cudaMallocManaged((void**)&B->elements, nBytes);

cudaMallocManaged((void**)&C->elements, nBytes);

// 初始化数据

A->height = height;

A->width = width;

B->height = height;

B->width = width;

C->height = height;

C->width = width;

for (int i = 0; i < width * height; ++i)

{

A->elements[i] = 1.0;

B->elements[i] = 2.0;

}

// 定义kernel的执行配置

dim3 blockSize(32, 32);

dim3 gridSize(

(width + blockSize.x - 1) / blockSize.x,

(height + blockSize.y - 1) / blockSize.y

);

// 执行kernel

matMulKernel<<<gridSize, blockSize>>>(A, B, C);

// 同步device 保证结果能正确访问

cudaDeviceSynchronize();

// 检查执行结果

float maxError = 0.0;

for (int i = 0; i < width * height; ++i)

maxError += fabs(C->elements[i] - 2 * width);

cout << "max error is " << maxError << endl;

// 释放托管内存

cudaFree(A->elements);

cudaFree(B->elements);

cudaFree(C->elements);

cudaFree(A);

cudaFree(B);

cudaFree(C);

}

int main()

{

test_gpu_3();

return 0;

}

notes for

dim3 blockSize(32, 32);

dim3 gridSize(

(width + blockSize.x - 1) / blockSize.x,

(height + blockSize.y - 1) / blockSize.y

);

Reference

History

- 20181121: created.